When I began studying music production five years ago, I spent many hours practicing critical listening on records I found or that were recommended to me. The goal was to identify the elements of arrangement, recording, programming, and mixing that made these records unique. While I was studying, I was introduced to immersive audio and music mixes in Dolby Atmos, but there was a strong emphasis on the technology’s immobility: these mixes were pretty impractical because the listener needed to stay in one place relative to a specific arrangement of speakers. Now that technology companies like Apple have implemented spatial audio with support for Dolby Atmos, listeners with access to these products can consider how spatialization impacts production choices. Let’s explore this by breaking down spatial audio with AirPods and seeing how this technology expands what we know about existing critical listening techniques.

It’s important to address the distinctions within spatial audio, as the listening experience depends on whether the track is stereo or mixed specifically for Dolby Atmos. The result of listening to a stereo track with spatial audio settings active is called “spatial stereo,” which mimics the effect of spatial audio on stereo tracks. When you use the “head-tracking” function while listening to a stereo track, sensors in the AirPods detect your head movements and adjust the positioning of the mix in relation to the location of your listening device.

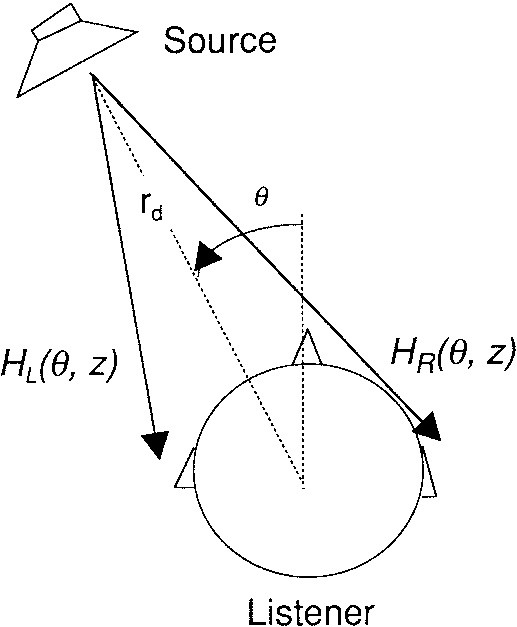

For a simplified summary of how this works, spatial audio and Dolby Atmos playback over headphones both rely on a model known as the head-related transfer function (HRTF). This is a mathematical function that describes how sound arriving from a given direction is altered before it reaches each ear, which accounts for how we listen binaurally. It captures psychoacoustic localization cues such as interaural level and time differences, along with the effects of the outer ear and the shape of the head. If you are interested in diving into these localization cues, you can learn more about them in my last blog post.

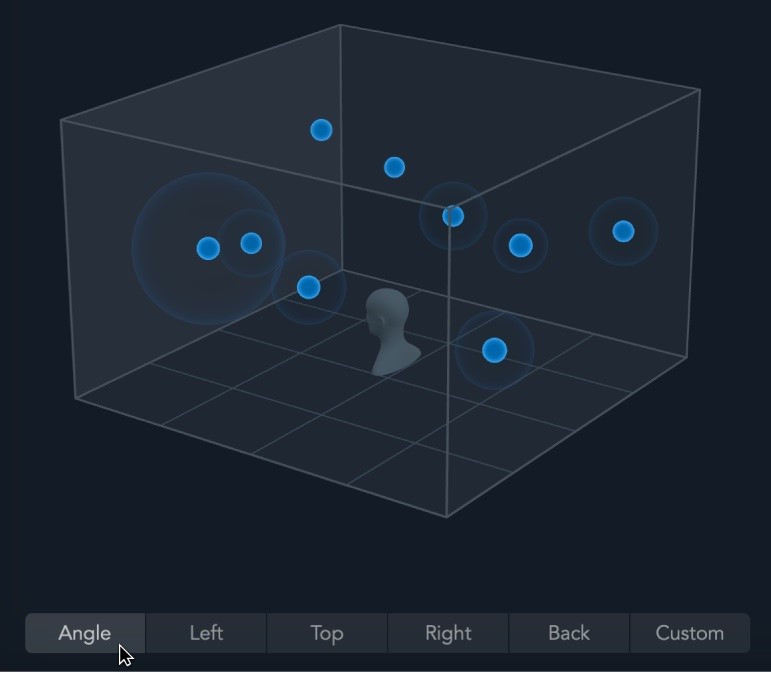
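
To make one of these localization cues concrete, here is a minimal Python sketch of the interaural time difference (ITD). It uses Woodworth’s spherical-head approximation, which is a textbook simplification and not the HRTF processing that Apple or Dolby actually use. The head radius, the speed of sound, and the function name are assumptions chosen for illustration.

```python
import math

# Assumed constants for illustration only.
HEAD_RADIUS_M = 0.0875       # a commonly quoted average head radius
SPEED_OF_SOUND_M_S = 343.0   # speed of sound in air at about 20 °C


def interaural_time_difference(azimuth_deg,
                               head_radius_m=HEAD_RADIUS_M,
                               speed_of_sound=SPEED_OF_SOUND_M_S):
    """Estimate the ITD in seconds for a distant source.

    Uses Woodworth's spherical-head formula, ITD = (a / c) * (theta + sin(theta)),
    where theta is the azimuth in radians. 0 degrees is straight ahead and
    positive angles are to the listener's right. Valid from -90 to 90 degrees.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between -90 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (0, 30, 60, 90):
        itd_ms = interaural_time_difference(azimuth) * 1000.0
        print(f"{azimuth:>3} degrees -> ITD of about {itd_ms:.2f} ms")
```

Under these assumptions, a sound directly to one side reaches the far ear roughly 0.66 ms after the near ear. A full HRTF also models level differences and the filtering of the outer ear, which this sketch leaves out.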

Ultimately, the listening experience of spatial stereo and Dolby Atmos mixes is different. For example, tracks mixed in Dolby Atmos place different elements of the instrumentation as “objects” in a three-dimensional field and process them through a binaural renderer to create an immersive mix in headphones. Meanwhile, spatial stereo sounds like a combination of added ambience, filtering, and the AirPods’ sensors forming a makeshift “room” for the song. Using the head-tracking feature with spatial stereo can impact the listener’s relationship to the production of the song in a similar way to a Dolby Atmos mix, and while it doesn’t necessarily make the mix better, it does provide a lot of new information about how the record was created. My emphasis here is on how we can listen differently to our favorite records in spatial audio, not on whether this feature makes the mix better or worse.
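
As a rough illustration of what head tracking does to an object-based mix, the Python sketch below keeps each object fixed in the room and recomputes its direction relative to the listener’s head. This is only a conceptual model, not Apple’s or Dolby’s renderer, and the object positions and function names are hypothetical rather than taken from any real mix.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Direction of a room-fixed source as heard from a turned head.

    Positive azimuth means the source is to the listener's right, and
    positive yaw means the head has turned to the right.
    """
    return wrap_degrees(source_azimuth_deg - head_yaw_deg)


# Hypothetical object positions (in degrees), for illustration only.
objects = {"vocal": 0.0, "piano": -45.0, "guitar": 60.0}

if __name__ == "__main__":
    head_yaw = 30.0  # the listener turns their head 30 degrees to the right
    for name, azimuth in objects.items():
        heard_at = relative_azimuth(azimuth, head_yaw)
        print(f"{name}: {azimuth:+.0f} in the room, {heard_at:+.0f} relative to the head")
```

With the head turned 30 degrees to the right, the centered vocal is heard 30 degrees to the left, which is why turning your head changes which ear an instrument favors.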

For this critical listening exercise, I listened through Apple Music to a song mixed in Atmos with production that I’m familiar with: “You Know I’m No Good,” performed by Amy Winehouse, produced by Mark Ronson, and recorded with members of the Dap-Kings. When listening in a new environment, in this case an immersive one, it’s always a good idea to choose a song that you know well. This track was also recorded in a rather unique way: the instruments were, for the most part, not isolated in the studio, and very few dynamic microphones were used, in true Daptone Records fashion. The song already has a “roomier” production sound, which works well with the ambient experience of spatial audio.

The first change I noticed with spatial audio head tracking turned on is that the low-end frequencies are lost. The low-end response in AirPods is already pretty fragile because the small drivers cannot accurately reproduce long wavelengths, so we rely on the harmonics above a note’s fundamental to rebuild the low end in our perception, a phenomenon known as the missing fundamental. With spatial audio, much of the filtering makes this reconstruction more difficult, and in this particular song it affects the electric bass, kick drum, and tenor saxophone. Because of this loss, I realized that a lot of the power from the drums isn’t necessarily coming from the low end. This makes sense because Mark Ronson recorded the drums for this record with very few microphones, focusing mostly on the kit sound and overheads. The drums cut through the ambience in the song and provide the punchiness and grit that matches Winehouse’s vocal attitude.
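
The idea that harmonics let us rebuild a low note that small drivers cannot reproduce can be sketched in a few lines of Python. This is a deliberately simplified model of pitch perception: it treats the implied pitch as the greatest common divisor of the surviving partials, and the 100 Hz cutoff and function names are assumptions for illustration, not measurements of AirPods.

```python
from functools import reduce
from math import gcd


def audible_partials(fundamental_hz, n_harmonics, cutoff_hz):
    """Harmonics of a note that sit at or above a hypothetical driver cutoff."""
    partials = [fundamental_hz * k for k in range(1, n_harmonics + 1)]
    return [f for f in partials if f >= cutoff_hz]


def implied_fundamental(partials_hz):
    """Greatest common divisor of integer partial frequencies, in Hz.

    A crude stand-in for the pitch a listener infers from a harmonic series.
    """
    return reduce(gcd, partials_hz)


if __name__ == "__main__":
    # A 55 Hz bass note (A1) played through a driver assumed to roll off below 100 Hz.
    surviving = audible_partials(55, 6, 100)
    print("surviving partials:", surviving)
    print("implied fundamental:", implied_fundamental(surviving), "Hz")
```

In this example the 55 Hz fundamental itself is gone, but the remaining partials still point back to it. If extra filtering removed enough of those partials, for instance leaving only 110 Hz and 220 Hz, the implied pitch would shift up an octave, which is one way to think about why the low end feels weaker.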

Since a lot of the frequency information and arrangement in many modern records lives in the low end, I think this is a great opportunity to explore how mid-range timbres interact in the song, particularly with the vocal, which is the most important instrument on this record. When I move my head around, the vocal moves away from the center of the mix and interacts more with some of the instruments that are spread out. I noticed that it blends the most with a very ambient electric guitar, the trombone, and the trumpet. However, since those three instruments have a lot of movement and fill much of the space where the vocal isn’t performing, the relationship feels more like call and response. This is emphasized by a similarity in timbres that I didn’t hear as clearly in the stereo mix.

Spatial audio makes a lot of the comping instruments in this song, such as the piano, more discernible, so I can attribute the feeling of forward movement and progression in the production to what is happening in these specific musical parts. In the stereo mix, the piano is doing the same job, but in spatial audio I’m able to separate it from the other comping instruments because of how I moved my head. I turned my head to the right and centered my attention on my left ear so that I could feel the support from the piano. Furthermore, I recognized the value of time-based effects in this song as I compared the vocal reverb and ambient electric guitar in stereo and spatial audio. A lot of the reverb blended together, but the delay automation stood apart from the reverb, so I could hear how the vocal delay in the chorus was working more effectively on specific lyrics. I also heard variations in the depths of the reverbs, as the ambient electric guitar part was noticeably farther away from the rest of the instruments. In the stereo mix, I can distinguish the ambient guitar, but how far back it sits in perceptual depth is clearer in spatial audio.

Overall, I think that spatial audio is a useful tool for critical listening because it allows us to reconsider how every element of a record is working together. There is more space to explore whether instruments and timbres complement each other and what their roles are. We can also consider how nuances like compression and time-based effects support the recording. Spatial audio doesn’t necessarily make every record sound better, but it’s still a tool we can learn from.