Using The Scientific Method in Assessment of System Optimization

A couple of years ago, I took a class for the first time from Jamie Anderson at Rational Acoustics, where he said something that has stuck with me ever since. He said, in effect, that our job as system engineers is to make it sound the same everywhere, and it is the job of the mix engineer to make it sound “good” or “bad”.

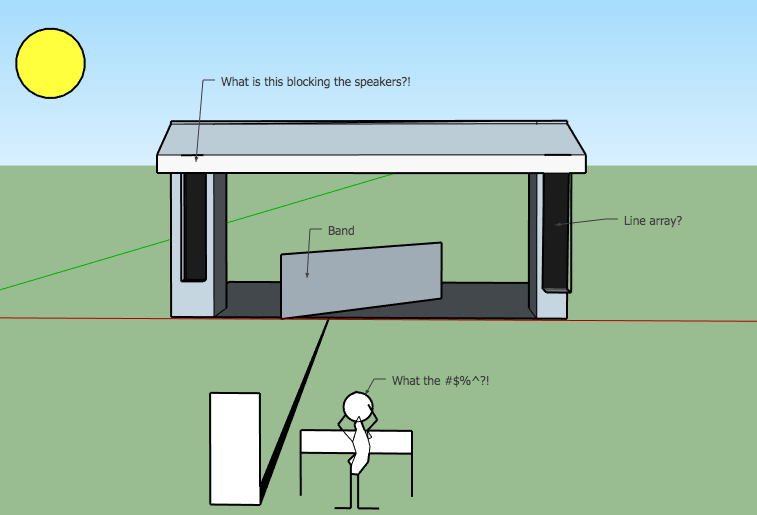

The reality in the world of live sound is that there are many variables stacked up against us: a scenic element in the way of speaker coverage, a client who does not want to see a speaker in the first place, a speaker that has done one too many gigs and decides that today is the day for one driver to die during load-in, or any of a myriad of other things that can stand in the way of the ultimate goal of a verified, calibrated sound system.

Before beginning the discussion of system optimization, we must draw a line and make all intentions clear: what is our role at this gig? Are we just performing the tasks of the systems engineer? Are we the systems engineer and the FOH mix engineer? Are we the tour manager as well, working directly with the artist’s manager? Why does this matter, you may ask? When it comes down to making final evaluations on the system, there are executive decisions that will need to be made, especially in moments of triage. Having clearly defined our role at the gig will help in making these decisions when the clock is ticking away.

So in this context, we are going to discuss the decisions of system optimization from the point of view of the systems engineer. We have decided that the most important task of our gig is to make sure that everyone in the audience is having the same show as the person mixing at front-of-house. I’ve always thought of this in terms of a painter and a blank canvas. It is the mix engineer’s job to paint the picture for the audience to hear; it is our job as system engineers to make sure the painting sounds the same every day by providing the same blank canvas.

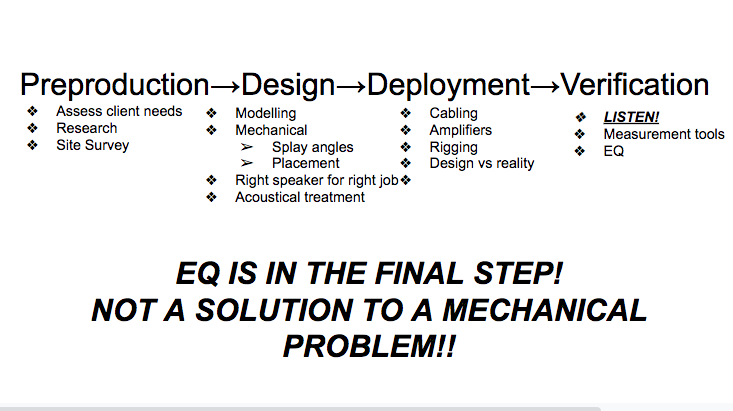

The scientific method teaches the concept of control with independent and dependent variables. We have an objective that we wish to achieve, and we assess our variables in each scenario to come up with a hypothesis of what we believe will happen. Then we execute a procedure, controlling the variables we can, and analyze the results with the tools at hand to draw conclusions and determine whether we have achieved our objective. Strictly speaking, an independent variable is the factor we deliberately manipulate, a dependent variable is the outcome we observe in response, and a controlled variable is one that is held constant. In the production world, these terms can have a variety of implications, and in this article I use them more loosely: an “independent variable” is a factor that is fixed for us and that we cannot change, while a “dependent variable” is one that we can manipulate while observing the results. It is an unfortunate, commonly held belief that system optimization starts at the EQ stage when really there are many steps before that. If there is a column in front of a hang of speakers, no EQ in the world is going to make them sound like they are not shadowed behind a column.

Now everybody take a deep breath in and say, “EQ is not the solution to a mechanical problem.” And breathe out…

Let’s start with preproduction. It is time to assess our first round of variables. What are the limitations of the venue? Trim height? Rigging limitations? What are the limitations imposed by the client? Maybe there is another element to the show that necessitates the PA being placed in one position over another; maybe the client doesn’t want to see speakers at all. In each scenario, we must ask ourselves, with both our technical brains and our careers in mind: what can we change and what can we not change? Note that the answer will not be the same in every circumstance. In one scenario, we may be able to convince the client to let us put the PA anywhere we want, making it a dependent variable. In another situation, for the sake of our gig, we must accept that the PA will not move or that the low steel of the roof is a bleak 35 feet in the air, and thus we face an independent variable.

After assessing these first sets of variables, we can now move into the next phase and look at our system design. Again, say it with me, “EQ is not the solution to a mechanical problem.” We must assess our variables again in this next phase of the optimization process. Maybe we have been given the technical rider of the venue we are going to be at and, due to budgetary constraints, we cannot change the PA: an independent variable. Perhaps we are carrying our own PA and thus have control over the design, within the limitations of the venue: a dependent variable, but with caveats. Let’s look deeper into this particular scenario and ask ourselves: as engineers building our design, what do we have control over now?

The first step lies in what speaker we choose for the job. Given the ultimate design control scenario, where we have the luxury of picking and choosing the loudspeakers we use in our design, different directivity designs will lend themselves better to one scenario than another. A point source has just as much validity as a line array, depending on the situation. For a small audience of 150 people with a jazz band, a point source speaker over a sub may be more valid than showing up with a 12-box line array that necessitates a rigging call to fly from the ceiling. But even in this scenario, there are caveats in our delicate weighing of variables. Where are those 150 people going to be? Are we in a ballroom or a theater? Even the evaluation of which box to choose for a design is as varied as deciding what type of canvas we wish to use for the mix engineer’s painting.

So let’s create a scenario: let’s say we are doing an arena show and the design has been established with a set number of boxes for daily deployment with an agreed-upon design by the production team. Even the design is pretty much cut and paste in terms of rigging points, but we have varying limitations to trim height due to high and low steel of the venue. What variables do we now have control over? We still have a decent amount of control over trim height up to a (literal) limit of the motor, but we also have control over the vertical directivity of our (let’s make the design decision for the purpose of discussion) line array. There is a hidden assumption here that is often under-represented when talking about system designs.

A friend and colleague of mine, Sully (Chris) Sullivan, once pointed out to me that the hidden design assumption we often make as system engineers, but don’t necessarily acknowledge, is that the loudspeaker manufacturer has actually achieved the horizontal coverage dictated by the technical specifications. This made me reconsider the things I take for granted in a given system. Say that in our design we choose to use Manufacturer X’s 120-degree line source element. They have established in their technical specs that there is a measurable point at 60 degrees off-axis (for a total of 120 degrees of coverage) where the polar response drops 6 dB. We can take our measurement microphone and check that the response is what we think it is, but if it isn’t, what really are our options? Perhaps we have a manufacturer defect or a blown driver somewhere, but unless we change the physical parameters of the loudspeaker, this is a variable that we entrust to the manufacturer. So what do we have control over? He pointed out to me that our choices lie in the manipulation of the vertical.

Entire books and papers can be, and have been, written about how we can control the vertical coverage of our loudspeaker arrays, but certain factors remain consistent throughout. Inter-element angles, or splay angles, let us control the summation of elements within an array. Site angle and trim height let us control the geometric relationship of the source to the audience and thus affect the spread of SPL over distance. Azimuth also gives us geometric control over where the directivity pattern of the entire array points in the horizontal plane. Note that this is distinct from the horizontal pattern control of the frequency response radiating from the enclosure, responsibility for which we have handed over to the manufacturer. Fortunately, the myriad loudspeaker prediction programs available from modern manufacturers have given the modern system engineer an unprecedented ability to assess these parameters before a single speaker goes up into the air.
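To put rough numbers on that geometric relationship, here is a minimal Python sketch. It assumes a single point source in free field obeying the inverse-square law, which a real line array does not, so treat it as an illustration of why trim height changes the front-to-back spread of SPL and not as a substitute for a manufacturer’s prediction software. The function names, the ear height, and the audience distances are all hypothetical.

```python
import math

def level_drop_db(trim_height, ear_height, near, far):
    """Level difference in dB between the nearest and farthest listener.

    Assumes one point source and inverse-square loss (6 dB per doubling
    of distance). Any length unit works as long as it is used consistently.
    """
    dz = trim_height - ear_height          # vertical offset, source to ears
    d_near = math.hypot(near, dz)          # straight-line distance, front row
    d_far = math.hypot(far, dz)            # straight-line distance, last row
    return 20.0 * math.log10(d_far / d_near)

def down_angle_deg(trim_height, ear_height, horizontal):
    """Angle below horizontal from the source to a listener, in degrees."""
    return math.degrees(math.atan2(trim_height - ear_height, horizontal))

# Hypothetical audience: ears at 1.2 m, front row 5 m out, last row 50 m out.
for trim in (6.0, 9.0, 12.0):
    drop = level_drop_db(trim, 1.2, 5.0, 50.0)
    top = down_angle_deg(trim, 1.2, 50.0)
    bottom = down_angle_deg(trim, 1.2, 5.0)
    print(f"trim {trim:4.1f} m: front-to-back drop {drop:4.1f} dB, "
          f"vertical coverage needed {bottom - top:4.1f} deg")
```

Under these assumptions, raising the source lengthens the path to the front row proportionally more than the path to the last row, so the front-to-back level difference shrinks while the vertical angle the array has to cover grows.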

At this point, we have made a lot of decisions on the design of our system and weighed the variables along every step of the way to draw out our procedure for the system deployment. It is now time to analyze our results and verify whether what we thought was going to happen actually happened. Here we introduce our tools to verify our procedure in a two-step process of mechanical, then acoustical, verification. First, we use tools such as protractors and laser inclinometers to collect data and assess whether we have achieved our mechanical design goal. For example, if our model says we need a site angle of 2 degrees to achieve a given result, we verify with the laser inclinometer that we got there. Once we have confirmed that we met our design’s mechanical goals, we must analyze the acoustical results.
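The mechanical check can be as simple as comparing each measured angle against the model with a tolerance. A minimal sketch, assuming a hypothetical tolerance of half a degree and made-up readings (only the 2-degree site angle target comes from the example above):

```python
def verify_mechanical(design, measured, tolerance_deg=0.5):
    """Compare measured angles to design angles.

    Returns {name: (error_in_degrees, within_tolerance)}.
    The 0.5 degree default tolerance is an assumption, not a standard.
    """
    report = {}
    for name, target in design.items():
        error = measured[name] - target
        report[name] = (error, abs(error) <= tolerance_deg)
    return report

# Design values from the prediction model; splay values are hypothetical.
design = {"site_angle": 2.0, "splay_1_2": 1.0, "splay_2_3": 3.0}
# Hypothetical readings from a laser inclinometer and the rigging pins.
measured = {"site_angle": 2.3, "splay_1_2": 1.0, "splay_2_3": 5.0}

for name, (error, ok) in verify_mechanical(design, measured).items():
    status = "OK" if ok else "RECHECK"
    print(f"{name:10s} error {error:+.1f} deg  {status}")
```

Anything flagged here is a mechanical problem to fix at the rig, before a measurement microphone or an EQ gets involved.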

Laser inclinometers are just one example of a tool we can use to verify the mechanical actualization of a design.

It is only at this stage that we finally introduce the analysis software to examine the response of our system. After examining our role at the gig, the criteria involved in pre-production, choosing design elements appropriate for the task, and verifying their deployment, only now can we move into the realm of analysis software to see if all those goals were met. We can utilize dual-channel measurement software to take transfer functions at different stages of the input and output of our system to verify that our design goals have been met, but more importantly to see if they have not been met and why. This is where our ability to critically interpret the data comes into play. By evaluating impulse response data, dual-channel FFT (Fast Fourier Transform) functions, and the coherence of our gathered data, we can assess how well our design has been achieved in the acoustical and electronic realm.
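For readers who want to see what a dual-channel measurement computes, here is a minimal sketch in plain Python. It assumes a common textbook approach (the averaged cross-spectrum divided by the averaged reference spectrum, often called the H1 estimator) and uses a deliberately naive DFT with no windowing, overlap, or delay compensation, all of which real measurement software handles. The “system” is a hypothetical pure gain of 0.5, with and without uncorrelated noise added at the measurement channel.

```python
import cmath
import math
import random

def dft(block):
    """Naive discrete Fourier transform; returns bins 0..N/2."""
    n = len(block)
    return [sum(block[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n))
            for k in range(n // 2 + 1)]

def transfer_and_coherence(reference, measured, block_size=32):
    """Averaged transfer function (H1) and magnitude-squared coherence.

    reference: signal sent into the system (e.g. the console output)
    measured:  signal coming back (e.g. the measurement microphone)
    """
    bins = block_size // 2 + 1
    gxx = [0.0] * bins   # averaged reference auto-spectrum
    gyy = [0.0] * bins   # averaged measurement auto-spectrum
    gxy = [0j] * bins    # averaged cross-spectrum
    for start in range(0, len(reference) - block_size + 1, block_size):
        x = dft(reference[start:start + block_size])
        y = dft(measured[start:start + block_size])
        for k in range(bins):
            gxx[k] += abs(x[k]) ** 2
            gyy[k] += abs(y[k]) ** 2
            gxy[k] += x[k].conjugate() * y[k]
    h = [gxy[k] / gxx[k] for k in range(bins)]
    coh = [abs(gxy[k]) ** 2 / (gxx[k] * gyy[k]) for k in range(bins)]
    return h, coh

random.seed(0)
ref = [random.gauss(0, 1) for _ in range(32 * 16)]       # 16 blocks of noise
clean = [0.5 * s for s in ref]                           # gain only
noisy = [0.5 * s + random.gauss(0, 0.5) for s in ref]    # gain plus room noise

h_clean, coh_clean = transfer_and_coherence(ref, clean)
h_noisy, coh_noisy = transfer_and_coherence(ref, noisy)
print("clean: mean |H| =", sum(abs(v) for v in h_clean) / len(h_clean),
      " mean coherence =", sum(coh_clean) / len(coh_clean))
print("noisy: mean |H| =", sum(abs(v) for v in h_noisy) / len(h_noisy),
      " mean coherence =", sum(coh_noisy) / len(coh_noisy))
```

In the clean case the coherence sits at 1 because everything at the measurement channel is explained by the reference. Once uncorrelated noise is added, the coherence falls, which is the cue to question the data at those frequencies before acting on it.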

What’s interesting to me is that often the discussion of system optimization starts here. In fact, as we have seen, the process begins as early as the pre-production stage when talking with different departments and the client, and even when asking ourselves what our role is at the gig. The final analysis of any design comes down to the tool that we always carry with us: our ears. Our ears are the final arbiters after our evaluation of acoustical and mechanical variables, and are used along every step of our design path along with our trusty use of “common sense.” In the end, our careful assessment of variables leads us to utilize the power of the scientific method to make educated decisions to work towards our end goal: the blank canvas, ready to be painted.

Big thanks to the following for letting me reference them in this article: Jamie Anderson at Rational Acoustics, Sully (Chris) Sullivan, and Alignarray (www.alignarray.com).