Rocket Man

One of the things I miss most, and one of the reasons I went into live sound, is the constant movement: meeting people and traveling the world. Having a call time and a routine on tour, where you travel a great deal, pass through countless airports, get on and off planes, and change time zones all the time… Yes, those are some of the things I love. For some other professions, though, talk of time changes, flights, and the world has a far more literal meaning…

Getting ready for “the show” fills us with excitement and adrenaline as we feel the energy of so many people gathered to see a performance. Other people feel that same adrenaline in a different way… Picture the engineer who handles the sound and the key communication between space and Earth, whose “show” is a dense cloud of vapor, a huge explosion, and the blast of decibels a spacecraft gives off as it lifts off toward outer space. Ufff, I have no words to imagine that feeling, which is why I got in touch with Alexandria Perryman, a sound engineer at NASA…

So let's travel together to understand a little about sound and transmission to outer space.

- Countdown: T minus 10 seconds… 9, 8, 7, 6, 5, 4, 3, 2, 1, 0!!!

Not long ago, the first crewed launch of a private spacecraft was televised. It lifted off from Kennedy Space Center at Cape Canaveral, home of Launch Complex 39, where several spacecraft have launched, among them Apollo 11 (which took humans to the Moon). To this day it remains one of the main gateways to space.

Communication between the space station crew and the support team on the ground is fundamental to the success of the mission. Being able to send a spoken message in space is crucial to most of the astronauts' activities: spacewalks, experiments, conversations with family and, most spectacular of all, sharing information with every human being on Earth.

But how is this achieved?

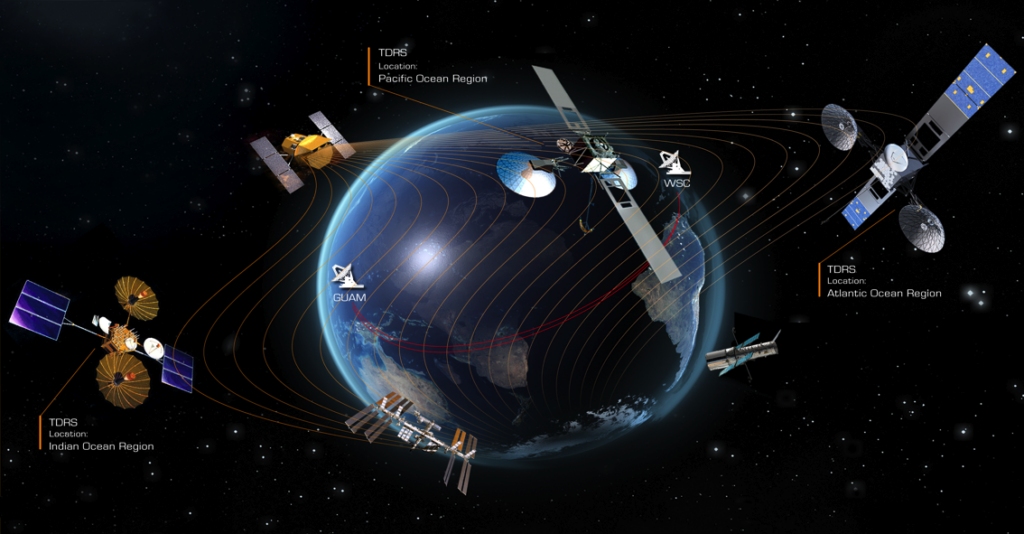

All of these transmissions reach the people orbiting more than 250 miles above Earth thanks to a network of communication satellites and ground antennas, all of which form part of NASA's Space Network.

– In the background… “Rocket Man” by Elton John, a favorite song of some astronauts on their journeys –

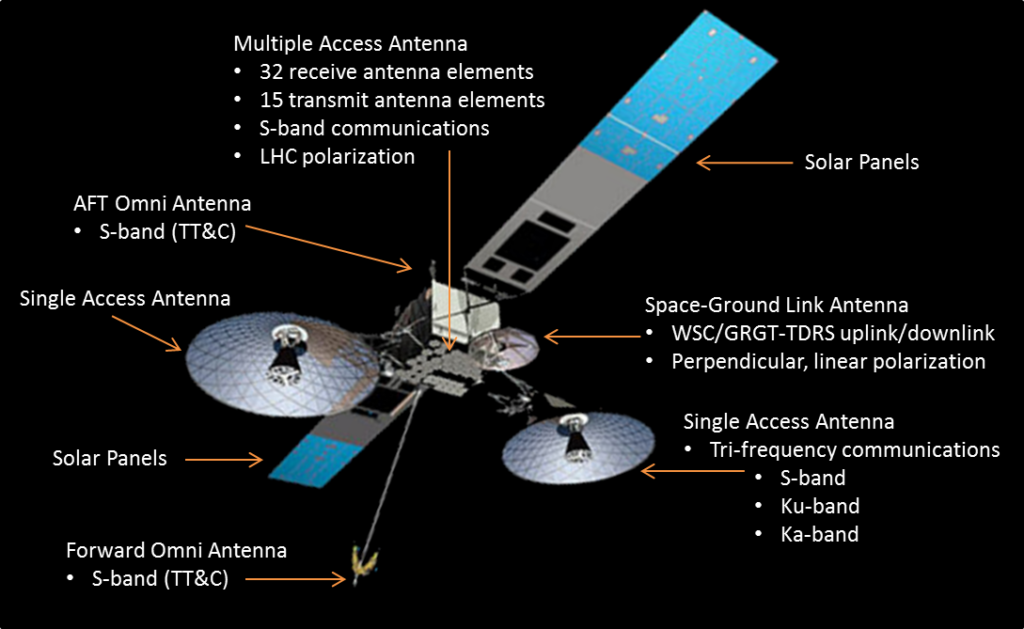

A large number of Tracking and Data Relay Satellites (TDRS) form the space-based part of the network. These big machines work like cell phone towers in space. They sit in geosynchronous orbit more than 22,000 miles above Earth, which lets the space station contact one of the satellites from anywhere along its orbit. As the communication satellites travel around Earth, they stay above the same relative point on the ground while the planet rotates.
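The "more than 22,000 miles" figure can be checked with a short calculation. The sketch below is not from NASA or from Alexandria; it assumes a circular orbit whose period equals one sidereal day and applies Kepler's third law, using standard published values for Earth's gravitational parameter and equatorial radius. The function name is made up for this illustration.

```python
import math

# Standard reference values (assumptions of this sketch, not from the article)
GM_EARTH = 3.986004418e14            # Earth's gravitational parameter, m^3/s^2
SIDEREAL_DAY_S = 86164.0905          # one rotation of Earth, in seconds
EARTH_EQUATORIAL_RADIUS_M = 6378137.0
METERS_PER_MILE = 1609.344


def geosynchronous_altitude_m():
    """Altitude of a circular orbit whose period is one sidereal day."""
    # Kepler's third law: T^2 = 4 * pi^2 * r^3 / GM, solved for r
    orbit_radius_m = (GM_EARTH * SIDEREAL_DAY_S ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return orbit_radius_m - EARTH_EQUATORIAL_RADIUS_M


altitude_km = geosynchronous_altitude_m() / 1000
altitude_miles = geosynchronous_altitude_m() / METERS_PER_MILE
print(f"Geosynchronous altitude: {altitude_km:,.0f} km ({altitude_miles:,.0f} miles)")
```

The result lands a little above 22,000 miles, which matches the figure quoted above.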

The Tracking and Data Relay Satellites handle voice and video in real time! Say an astronaut on the space station wants to send data to Mission Control at NASA's Johnson Space Center. First, a computer on board the station converts the data into a radio frequency signal. An antenna on the station transmits the signal to a TDRS, which directs it to the White Sands test facility, where testing and data analysis take place. Landlines then send the signal to Houston, and computer systems on the ground convert the radio signal back into readable data. If Mission Control wants to send data back, the process repeats in the opposite direction, going from the test facility to a TDRS and from there to the space station. The incredible part is that processing and converting the data along this path takes only a few milliseconds, so the processing itself adds no noticeable delay to the transmission.
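Apart from processing time, the distance the signal travels sets its own floor on the delay. The sketch below is a rough estimate of my own, not a NASA figure: it assumes the relay satellite sits about 35,786 km up, the station about 400 km up (roughly the "more than 250 miles" mentioned earlier), and the most favorable geometry, with both the station and the ground antenna directly beneath the satellite. Real paths are longer, so real delays are larger.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458

# Assumed altitudes for this illustration
RELAY_ALTITUDE_KM = 35_786.0    # geosynchronous relay satellite
STATION_ALTITUDE_KM = 400.0     # space station, roughly 250 miles up


def min_one_way_delay_ms():
    """Shortest possible station -> relay -> ground travel time, in ms."""
    # Best case: the station is directly below the relay satellite
    station_to_relay_km = RELAY_ALTITUDE_KM - STATION_ALTITUDE_KM
    # Best case: the ground antenna is directly below the relay satellite
    relay_to_ground_km = RELAY_ALTITUDE_KM
    total_km = station_to_relay_km + relay_to_ground_km
    return total_km / SPEED_OF_LIGHT_KM_S * 1000


one_way_ms = min_one_way_delay_ms()
print(f"Minimum one-way delay: {one_way_ms:.0f} ms")
print(f"Minimum round trip:    {2 * one_way_ms:.0f} ms")
```

Under these assumptions the signal needs roughly a quarter of a second each way just to cover the distance, before any landline hop or processing is counted.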

All this communication is vital to learning about and discovering many things, such as conditions in Earth orbit, where astronauts carry out experiments that provide valuable information in physics, biology, astronomy, meteorology, and many other fields. The Space Network delivers this unique and special scientific data to Earth.

– “Here Comes the Sun” by The Beatles, a favorite song of astronauts… –

Chatting with Alexandria, she explained that before the Space Network existed, NASA's astronauts and spacecraft could only communicate with the support team on Earth when they were in view of an antenna on the ground. That allowed a little less than fifteen minutes of communication roughly every hour and a half. Communication in those days was slow and complicated. Today the Space Network provides nearly continuous coverage every day, which is hugely important for development and discovery in space.
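To put those numbers in perspective, here is a quick calculation of how much of the time a crew could actually talk to the ground before the Space Network. It simply treats the figures above as an upper bound of fifteen minutes of contact in every ninety; the function name is invented for this illustration.

```python
def coverage_fraction(contact_minutes, orbit_minutes):
    """Share of the time during which a ground antenna was in view."""
    return contact_minutes / orbit_minutes


# At most 15 minutes of contact roughly every 90 minutes
pre_network_coverage = coverage_fraction(15, 90)
print(f"Coverage before the Space Network: under {pre_network_coverage:.0%}")
```

In other words, crews were out of contact for more than five sixths of the time.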

In 2014, a new data transmission technology called “OPALS” was tested. It showed that laser communications can speed up the flow of information between Earth and space compared with radio signals. OPALS has also collected an enormous amount of data that advances the science of sending lasers through the atmosphere. The sound engineers in charge of ground communication with the astronauts do not use it yet, though.

- Did you know that the crew of Gemini 6 started the wake-up song tradition in 1965, waking up to “Hello, Dolly!” sung by Jack Jones? -

As a sound engineer, I wanted to know what signal flow the audio engineers working at NASA use, and this was the answer…

All the signal routing and mixing is done on an Avid Euphonix System 5 console. When a signal or data is sent from Earth to space, it goes through the audio console first and then on to a digital encoder. The encoder sends the information over radio frequencies to a decoder on a satellite in space, so the crew can stay in communication with the ground.

As mentioned earlier, radio frequencies are still used today because they are easier to pick up and carry sound much more clearly. If astronauts travel deeper into space, the transmission method changes: signals go directly to specialized satellites that pass encoded data among themselves. This approach adds a little more delay, but no sound quality is lost.

– The astronauts steady the spacecraft on its way to the International Space Station and watch Tremor (the dinosaur), which served as the zero-gravity indicator –

Something the whole world witnessed was the moment astronauts Bob Behnken and Doug Hurley, who traveled on the private Dragon spacecraft, arrived at the space station and were welcomed by Chris Cassidy, Anatoly Ivanishin, and Ivan Vagner. They said a few words live, using a wireless microphone connected directly to a camera, which sent the signal to a satellite along the signal path described earlier. Alex, behind the console broadcasting to the whole planet, could feel a bit of delay (more than usual), but it did not affect the sync between video and audio or the sound quality. She had a good show! She also told me, laughing, that she was glad about the basic courses she had given the astronauts so they could handle the audiovisual equipment in space.

I was able to feel the thrill of operating a space mission through the words and experiences of a sound engineer who stresses how important it is to be the link between space and the planet, sharing the passion, the technology, and the discoveries that shape our technological future as human beings. I don't feel so far from that sensation, even though you are literally living in another world.

For anyone who is unsure which path to take or how to land this kind of opportunity, on tour or in other fields, here is how it happened for Alex: she applied for a job posted publicly on professional networks. The listing did not say she would be working for NASA, and she only found out when she arrived… This shows, like so many other examples, that you should not judge but search and explore. These opportunities arrive when you least expect them… In the meantime, prepare yourself so that when they come, you are as ready as you can be.

I am very grateful to Alexandria Perryman for her time and conversation, and to Karrie Keyes for the wonderful introduction.

“That's one small step for (a) man, one giant leap for mankind.” Neil Armstrong's first words on the Moon.