Dear Everyone, you are not alone.

**TRIGGER WARNING: This blog contains personal content surrounding issues of mental health including depression and anxiety, and the Covid-19 pandemic. Reader discretion is advised.**

The alarm on my phone went off at 6:30 a.m.

I rolled out of my bunk, carefully trying to make as little noise as possible as I gathered my backpack, clothes, and tool bag before exiting the bus.

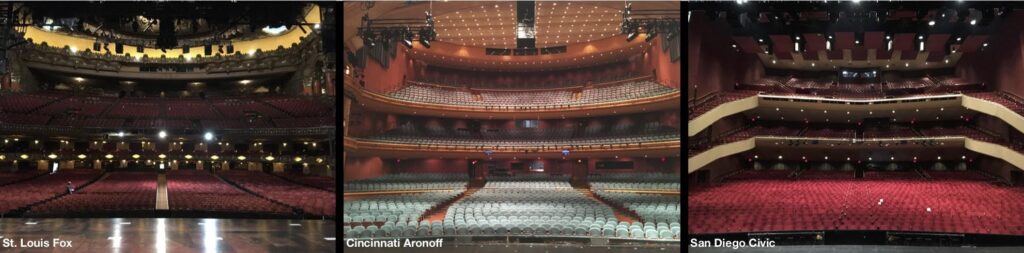

The morning air felt cool against my face as I looked around, trying to orient myself toward the arena's loading dock. Were we in New York? Ohio? Pennsylvania? In the morning before coffee, those details were difficult to remember.

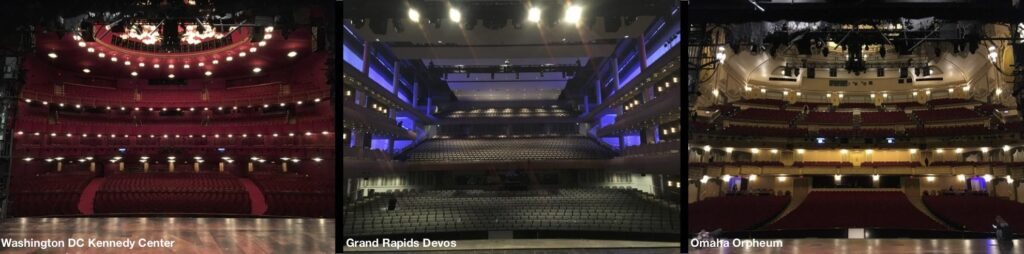

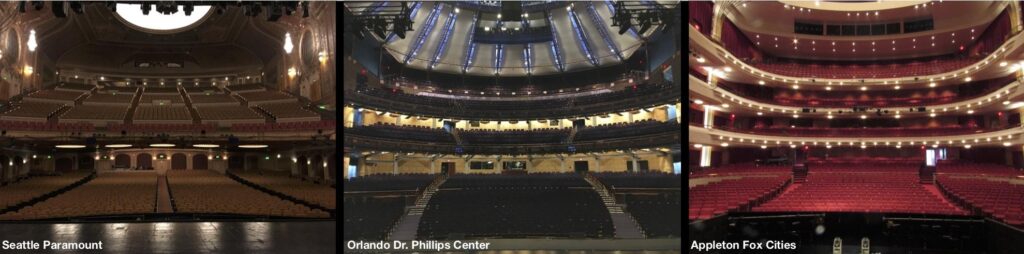

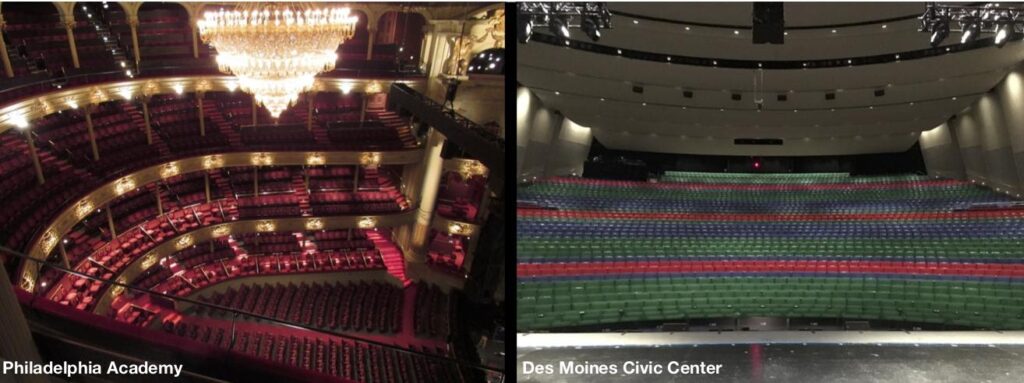

Past the elephant door, the arena sprawled out before me, empty and suspensefully silent. I looked up with a mixed sense of awe and critical analysis as I noted the three tiers of the arena, the red seats forming distinct geometric shapes between each section. As I made my way out to the middle of the deceptively large room, I looked toward the ground in hopes of finding, if I was lucky, that tell-tale button marking the center of the floor.

As I set up my tripod, I heard the footsteps of the rigging team as they began stretching out their yellow measuring tapes across the cement floor. The clapping of their feet echoed in the room and soon the sound of their voices calling out distances joined the chorus in the reverb tails.

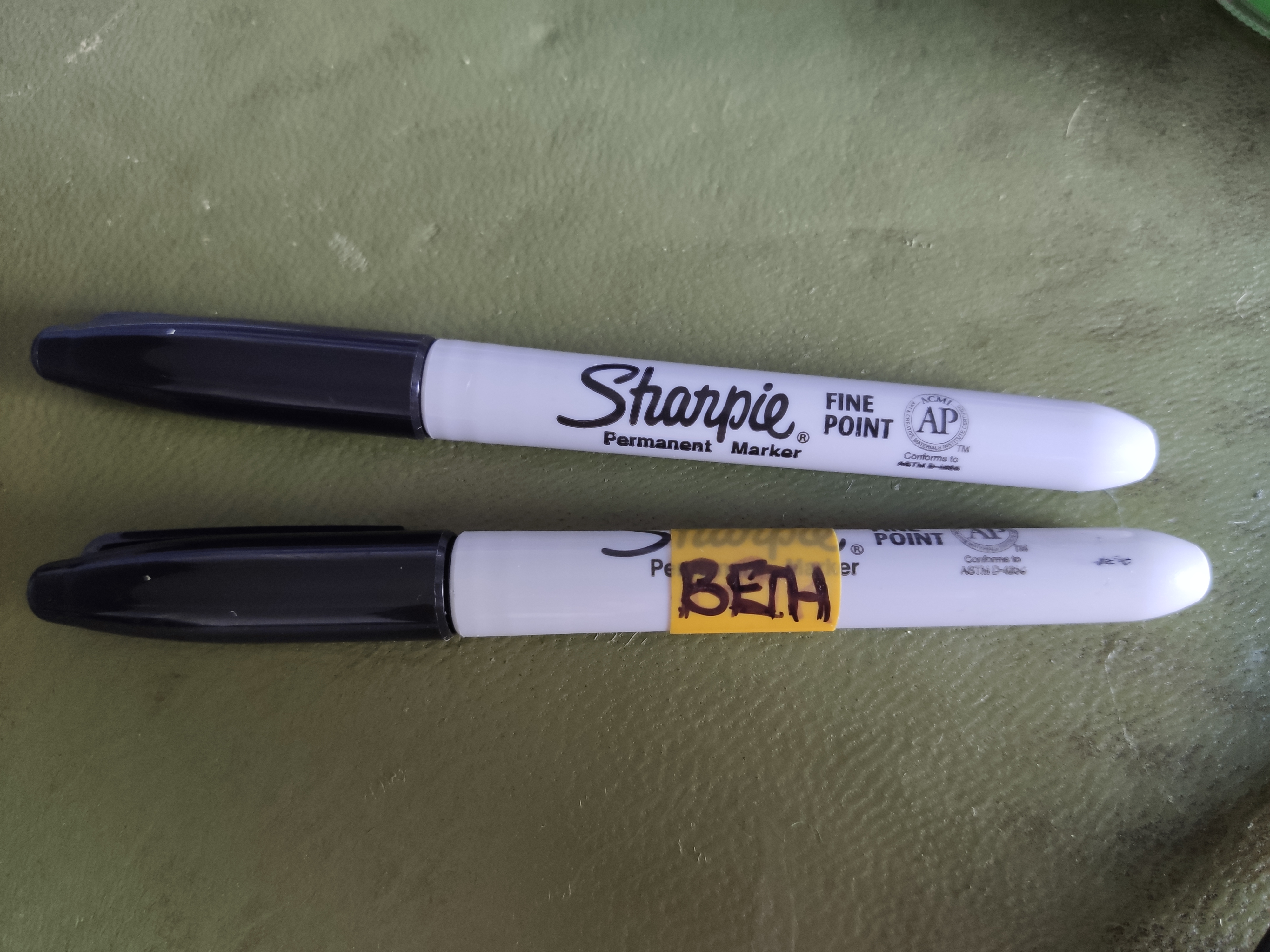

I turned on my laser and pulled out my notepad, the pen tucked in my hair as I aimed for the first measurement.

Then I woke up.

Above me, all I could see were the white ceiling tiles of the basement while the mini-fridge hummed in the corner of the room.

For a few seconds, or maybe it was a full minute, I had absolutely no idea where I was.

I wanted to scream.

I lay in bed for what could have been 15 minutes or an hour, telling myself I had to get out of bed. I couldn't just lie here. I had to do something. Get up. Get UP.

Eventually, I made my way upstairs and put on a pot of water for coffee. When I unlocked my phone and opened Facebook, I saw a status update from a friend about a friend of a friend who had passed away. My heart sank. I remembered doing a load-in with that person years ago, at a corporate event in another city, in another lifetime. The post didn't include details on what had happened. Frankly, it wasn't anyone's business but the family's and those closest to them. Yet my heart felt heavy.

Six months ago, or maybe more (time had ceased to have any tangible meaning at this point), I had been sitting in a restaurant in Northern California when the artists told the whole tour that we were all going home. Tomorrow. Like a series of ill-fated dominoes, events were canceling one by one across the country and across the world. Before I knew it, I was back in my storage unit at my best friend's house, trying to shove aside the boxes I had packed up four or five months earlier to make room for an inflatable mattress so I had somewhere to sleep. I hadn't expected to be "home" yet, so I had no plan for what I was going to do.

Maybe I'll go camping for the next month or so, I thought. Try to get some time to think. I loved nature, and being out in the trees always made me feel better about everything, so maybe that was the thing to do. Every day I looked at the local newspaper's report of the number of Covid-19 cases in California. It started out in the double digits. The next day it was in the triple digits. Then it grew again. And again. Every day the numbers grew bigger, and notices of businesses closing and areas being restricted filled the pages and notifications across the Internet.

Fast-forward, and the next thing I knew, I was packing all my possessions into a U-Haul trailer and driving across the country to be with my sister in Illinois. She had given birth to my baby niece a little over a year earlier, so I figured the best thing I could do was spend time with my family while I could.

I was driving somewhere across Kansas when the reality of what was happening hit me. As someone who loved making lists and planning out everything from my packing lists to my hopes and dreams in life, I, for once, literally had no idea what I was doing. This just seemed like the best idea I could think of at the time.

Fast-forward again, and I was sitting on the phone in the basement of my sister's house, in the room she had graciously fashioned for me by sectioning off space with tapestries. I looked at the timer on my phone showing how long I had been on hold with the Unemployment Office: two hours and thirty minutes. It had taken twenty calls in a row just to get through to the California Employment Development Department. At the three-hour mark, the line disconnected. I just stared down at my phone.

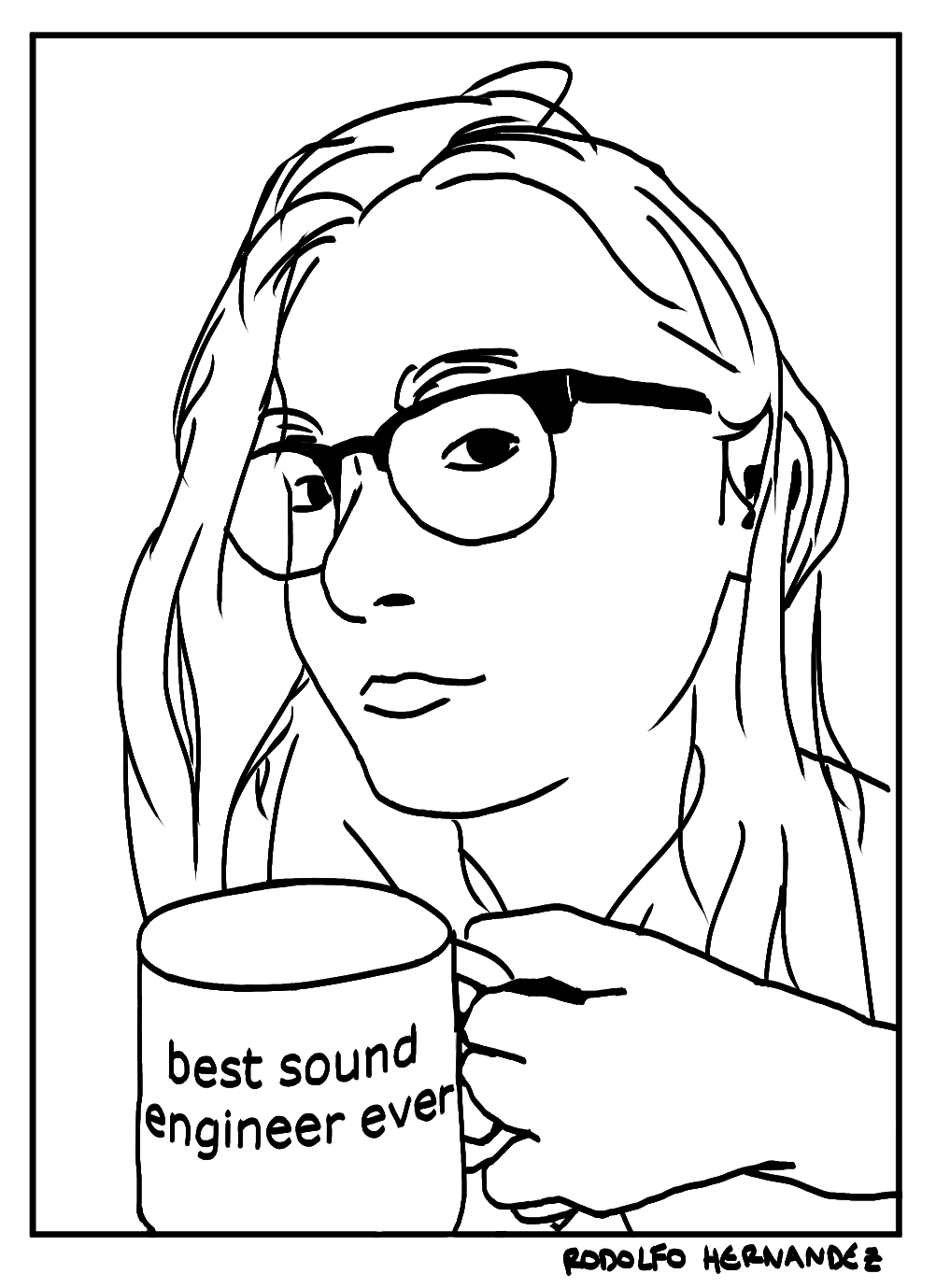

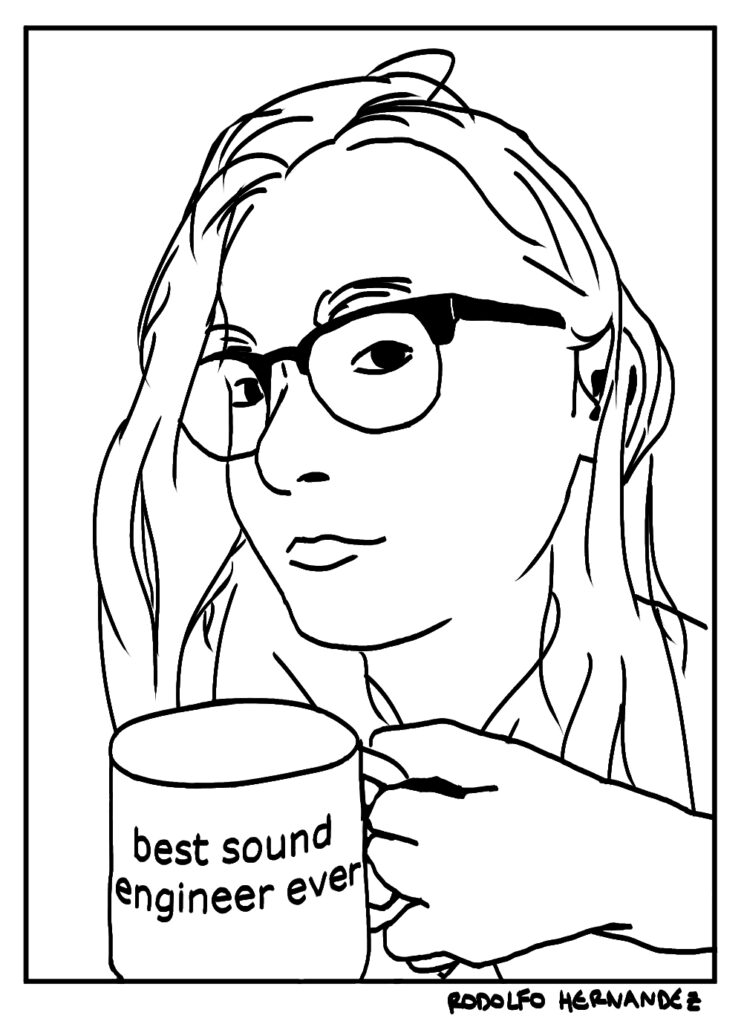

I remember one Christmas dinner with my dad's side of the family when I tried to explain what I do.

“So you are a DJ, then?” my aunt asked enthusiastically, believing that she had finally gotten it right.

“No,” I said.

“Do you play with the band?” my uncle asked.

“No, I’m the person who tries to make sure everyone in the audience can hear the band,” I tried to laugh.

Everyone laughed that sort of half-laugh when you try to pretend you get the joke, but you don’t actually get it.

Across my social media feeds, friends, colleagues, acquaintances, and everyone in between were all sharing updates: how they had to get "real jobs," how they couldn't get through to unemployment or their state had completely failed to get them any unemployment at all, how they were angry and desperate, and how they needed to feed their families. Industry leaders began speaking out to the government on behalf of the live events industry, pleading for financial relief for businesses, venues, individuals, and more, and my feeds flooded with initiatives and campaigns raising awareness of the plight of the live events industry.

Yet when I talked to people who were not in the industry, they seemed to have no idea that the live events sector had been affected at all. Worse yet, I realized more and more that few people had any idea of what people in the live events industry actually do. Organizations struggled to get news channels to run exposés on the subject, perhaps because so many people in every sector of every industry were struggling at the same time. In one conversation, I explained to a friend that nearly 100 people had worked on one tour I was part of, counting production, the tech crew, the artist's tech crew, everyone. They couldn't believe so many people worked behind the scenes at one concert.

Yet the more I talked about my job and the more time that passed, the more I felt like I was talking about a dream. A fear grew inside me that there was no end in sight. The stories started to repeat themselves, and they began to feel like stories of what had been, not what was. It was becoming increasingly difficult to concentrate when talking to people about "regular" things in our daily lives because it was not work. Talking about the weather was not talking about rigging plots or truckloads, so my brain just refused to focus on it. Yet I couldn't stop thinking about the industry. I watched webinars and learned new things, and I wanted so desperately to go back to my career that I fabricated schedules and deadlines around my other obligations just to feel like the work was still there.

Then the thought that underpinned all this rose up like a monster from the sea:

Who am I without my job?

I read an article Dave Grohl wrote [1] about performing and playing music on-stage for people, how there was nothing like that feeling in the whole world. I think he hit on something that, in effect, is really indescribable to anyone who has not worked in the live events world. There was a feeling unlike any other of standing in a room with tens of thousands of people screaming at deafening levels. There was a feeling unlike any other of standing alone in a room listening to a PA and crafting it to sound the way you wanted it to. There was a feeling unlike any other of hearing motors running in the morning while pulling a snake across an arena floor. There was a feeling unlike any other of complete, utter exhaustion riding a bus in the morning to the next load-in after doing 4, 5, 6, however many gigs in a row. I tried to explain these feelings to my friends and family who listened with compassion, but I couldn’t help but feel that sometimes they were just pretending to get the joke.

Days, weeks, months floated by, and the more time passed, the more I felt like I was floating in a dream. This was a bad dream that I would wake up from. It had to be. Then, when I came back to reality and realized that this was not a dream, that this was where I was in my life now, it felt like my brain and the entire fabric of my being were splitting in two. I was well aware of how fortunate I was that my sister had taken me in. Every morning I tried to name five things I was grateful for to keep my spirits up, and my sister was always one of them.

The painful irony was that I had stopped going to therapy in January 2020 because I felt I had gotten to an OK point in my life. I had gotten where I needed to be for the time being, and I could shelve all the other stuff until I had time to address it. Then suddenly I had all the time in the world, and, shut down in quarantine, all those things in my brain I had told myself I would deal with later... well, now I had no choice but to deal with them. And really, all of it intersected with the question at hand: who was I without my job?

And I don't think I was alone.

The thing people don't tell you about working in the industry is the social toll it takes on your life and soul: the things you give up, and the parts of yourself you give up, to make it a full-time gig. Yet there is this mentality of toughing it out, because there are 3,000 other people waiting in line to take your spot, and if you falter for even one step, you could be gone and replaced just as easily. Organizations focusing on mental health in the industry began to emerge during the pandemic because, in fact, it wasn't just me. Many people struggle to find a balance between life and work even in normal times, let alone during a global health crisis. All this should make one feel less alone, and to some extent it does. The truth is that the journey toward finding yourself is, as you would imagine, something each person has to make for themself. And my reality was that despite all the sacrifices this job demanded, all I wanted to do was run back to it as fast as I could.

Without my work, it felt like there was a huge hole in my entire being. That sense of being in a dream pervaded my every waking moment, and even asleep I dreamt of work, to the point where I had to take sleeping aids just to stop thinking about it at night too. I found myself in a strange place in my life where I reconnected with hobbies I had previously cast aside for touring life and tried to appreciate whatever happiness they could offer. More webinars and industry discussions popped up about "pivoting" into new industries or fields, and in some of these, you could see the pain on the interviewees' faces as they described how they had made their way in another field.

One day I was playing with my baby niece, and I told her we had to stop playing to go do something, but we would come back to playing later. She just looked at me in utter bewilderment and said, "No! No! No!" Then I remembered that small children have no concept of "now" versus "later." Everything literally is in the "now" for them. It struck me as something very profound that my niece lived completely in the moment. Everything was a move from one activity to the next, always moving forward. So, with much effort and pushback against every fiber of my future-thinking self, I stopped trying to think any further than the day ahead of me. Just put one foot in front of the other, and be grateful every day to be here, in this moment.

Now, with the vaccination programs here in the United States and rumblings of movement trickling across the grapevine, it feels, for the first time in more than a year, like there is hope on the horizon. Part of me is so desperate for it to be true, and part of me is suspiciously wary of it. It's like seeing a carrot on the ground while being very aware that there is a string attached to it that can easily pull the carrot away from you once more.

There is a hard road ahead, and a trepidatious one at that. Yet after months and months of complete uncertainty, there is something to be said for having hope that things will return to a new kind of "normal." I say a new kind, because "normal" alone would imply returning to how things were before 2020. I believe there was good change and reflection in the pause of the pandemic that we should not abandon: a collective reflection on who we are, whether or not we wanted to admit it to ourselves.

What will happen from this point forward is anyone's guess, but I always like to think that growth doesn't come from being comfortable. So, with one foot in front of the other, we move forward into this next phase. And as another phrase that seems to come up over and over again goes, "Well, we will cross that bridge when we come to it."

References:

[1] https://www.theatlantic.com/culture/archive/2020/05/dave-grohl-irreplaceable-thrill-rock-show/611113/