As you can tell from the title, and the use of the first person singular personal pronoun, this blog is personal – but aren’t they always? – but maybe a little confessional too.

It’s the end of February and we’re looking forward to March with its winds: March winds followed by April showers, at least that’s what we say in the UK. In fact, they’ve been a bit early and a little ferocious this last week with ‘Storm Eunice’ gusting at over 100mph. I mention this since maybe March might just turn out better than expected.

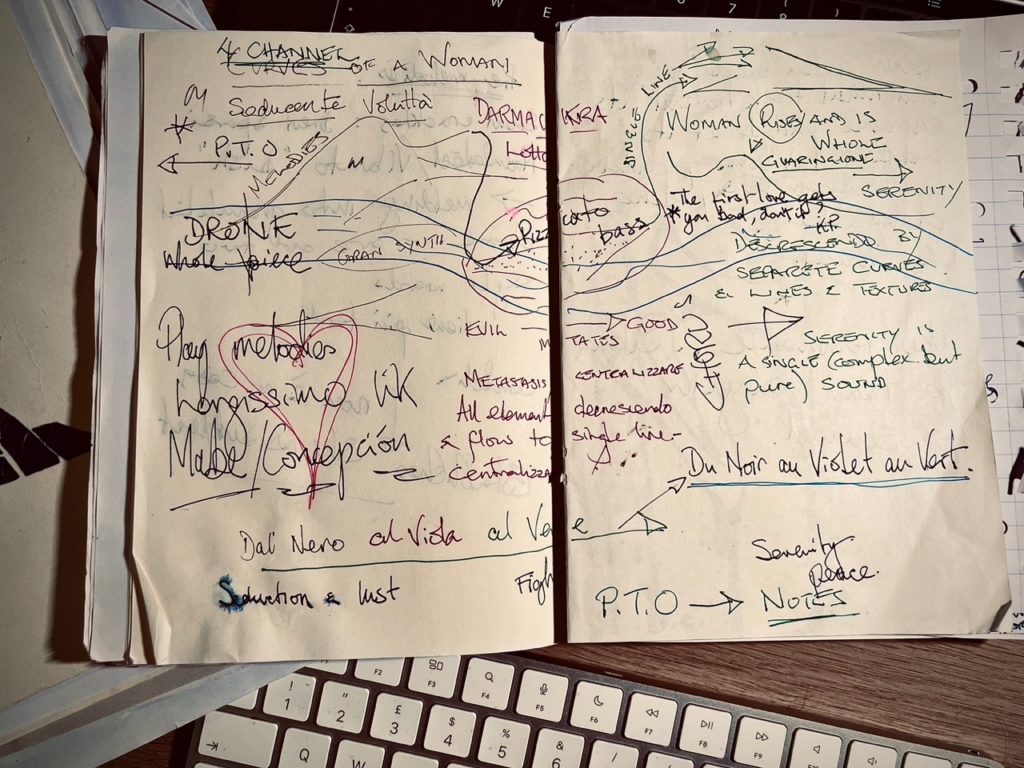

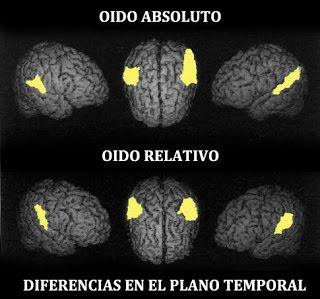

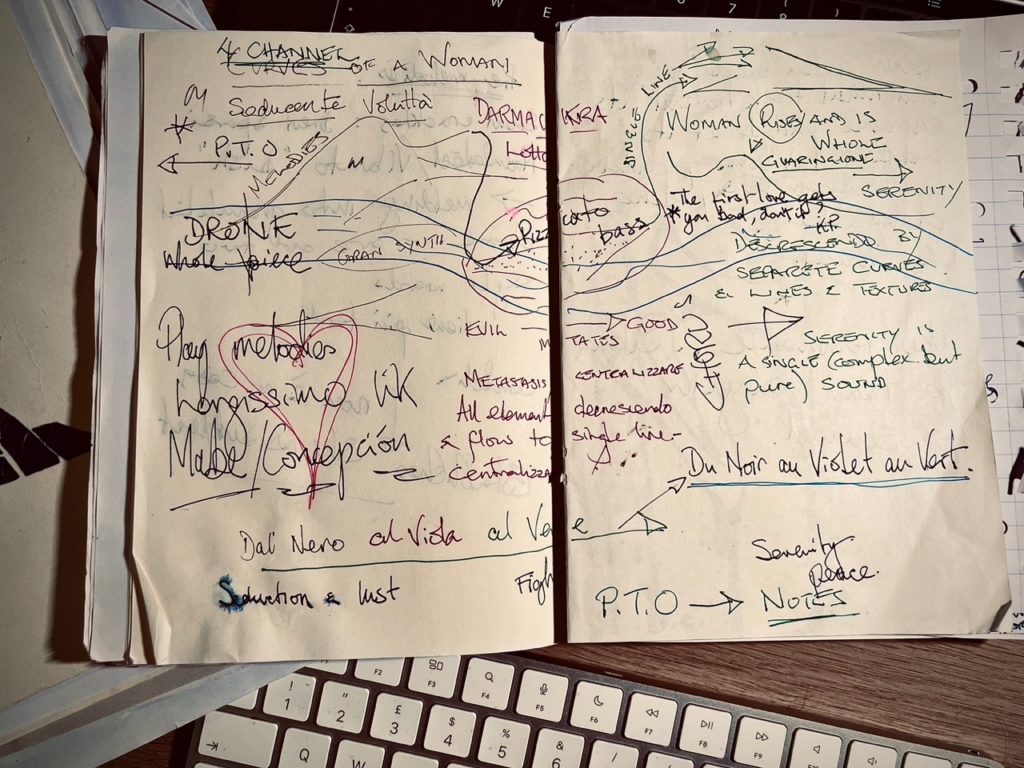

After a long wait, the Giuseppe Verdi Conservatorio in Turin finally has its new electroacoustic composition teacher, and my first lesson is on March 1st. So… I’d better get moving. I was persuaded to scrap, or at least set aside for now, my previous idea of an electroacoustic piece with cello melodies based on the bodily curves of my girlfriend, the Mexican artist who eventually broke my heart. That was eight months ago and, as my title suggests, these days I still occasionally cry over lost love and what might have been, but always on a Sunday. The melodies had already been written: transcriptions of her side, shoulder, and neck seen from behind, with counter melodies taken from shadows and lines on her back, including a tattoo. I had also already sketched in some ideas for granular, dusty, effervescent electronics to represent the shadows: where they are positioned, their density, and the amount of space occupied.

I had also begun to think about extending the sketch into a three-movement piece with the tattoo representing the struggle between the first movement’s depiction of voluptuousness and seduction and the redemption of the last movement symbolizing serenity and peace, with the granular drones containing fragments of spoken text softly woven into it.

Have I just persuaded myself to take it on again? Will the redemption of the last movement be enough to release me from the pain of heartbreak and leave me at peace with myself? Maybe yes, maybe no! But, as I mentioned in a previous blog, I value authenticity in both the writing and the performance of a piece of music, and this would most certainly be authentic, there being a good reason for this art to exist.

Would it stop me crying on a Sunday?

I’ll tell you what made me cry on Sunday the 13th of February: my father dying of complications from contracting Covid 19. He was 97 admittedly, but still in good shape and he had had his vaccinations, but complications set in, a minor stroke, taken into the ICU, and in the end, he was put into an induced coma and life support removed to allow him to go peacefully. This was in Minneapolis while I was here in Turin, but I was kept up to date by my younger brother and sister while they managed things there. He was absent for much of my childhood, but I can still thank him for the gift of music he passed on to us. He was a tenor, with that typically English sound and I still remember, as a young child, sitting under the table listening to him practicing arias from opera and Neapolitan songs…that was ‘the gift’.

So, from when he was taken ill until Sunday 13th, and up until the funeral on the following Friday, I was pretty useless, with a total lack of drive and enthusiasm for anything, and music was the furthest thing from my mind.

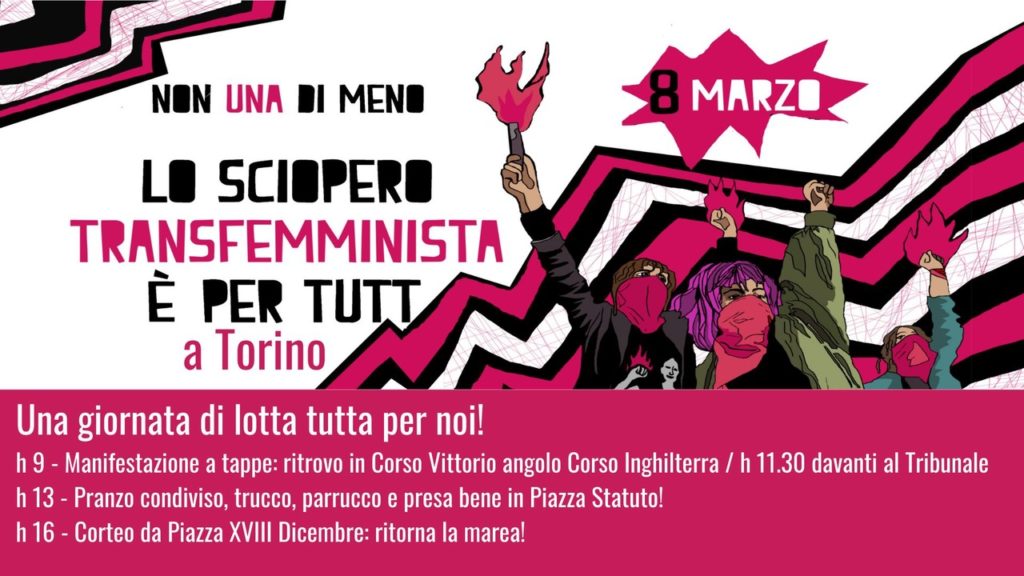

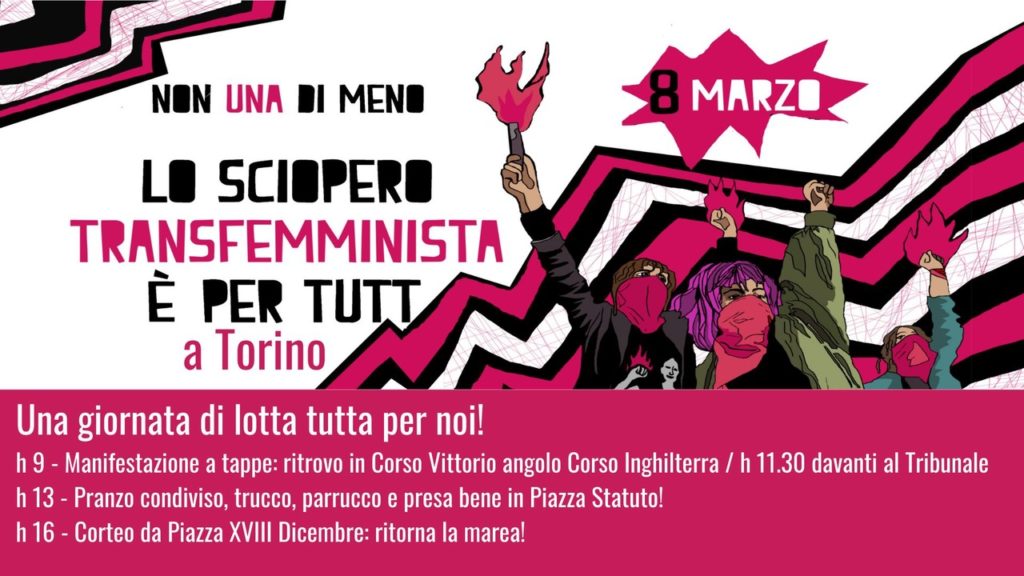

Did I cry last Sunday? No, actually! I didn’t have time; I was too busy with Non Una di Meno, Torino (NUDM, originally formed by feminist groups in Chile and now found across the continents), preparing for International Women’s Day, or as I like to call it: ‘Move over patriarchy! You’ve been pretty useless for these last few thousand years so step aside and let us take over; we have both the intelligence and the empathy.’ In Italy we’re planning a 24-hour national strike and have been in touch with the unions for their support; and we have a few grievances:

We deplore gender-based violence: Over 100 women murdered by men in 2021.

We also deplore the bias and inaction of the Italian judicial system which routinely pushes the blame for rape onto the victim. And in one case I personally know of, when a young woman went to the police to report violence at the hands of her then-boyfriend, she was told that it was not a crime since it had taken place within a relationship.

Women have been the first to lose work due to the pandemic and are not treated equally with their male counterparts.

Health care for women who suffer from endometriosis, vulvodynia, etc…basically anything to do with a woman’s womb or genitalia is at least six months of waiting or, if you can afford it, you can pay for private health care.

If only we could persuade all stay-at-home women to strike on that day, that would certainly put the male world of work, and its master, Capitalism, under some strain, even if only for 24 hours. As Carla Lonzi (Italian feminist) wrote in her ‘Manifesto di Rivolta Femminile’ of 1970:

“We recognize in the unpaid domestic work of women, the service which allows both state and private capitalism to exist.”

I keep this picture of a young woman of Non Una di Meno on my cellphone to remind me that there are young women willing to stand up and be counted, particularly for the women who don’t have a voice. We have many migrants from North Africa in all major cities of Italy and the women, in particular, need advocacy. One of our LGBTQIA+ groups held drop-in sessions for gay and lesbian refugees, who would have been tortured, imprisoned, or killed if sent back to their own country. I helped two Nigerian lesbian refugees prepare their evidence for the commission that would decide if they were given permission to stay based on refugee status; they were successful. As Covid restrictions ease these drop-ins might restart.

So I’ve been personal, confessional, and political; put more simply, the last couple of weeks have been a bit meh!

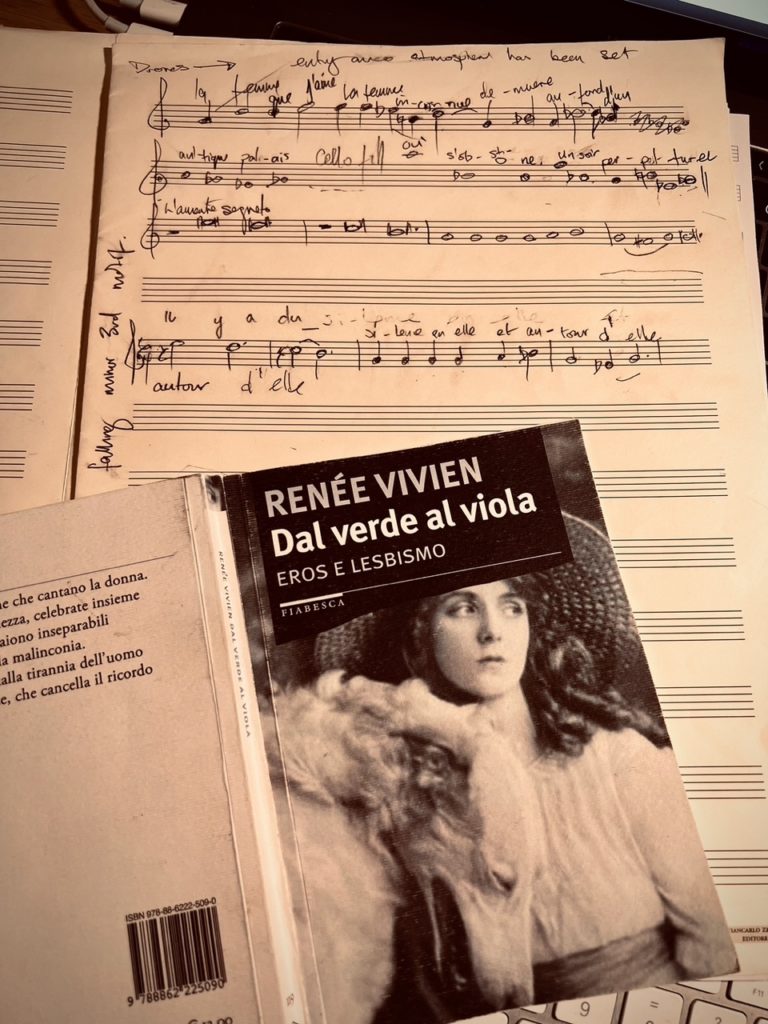

Thus, finally, I arrive at the music part of the blog: the first song of my song cycle.

The projected song cycle, settings of poems by lesbian writers, starts with La divinité inconnue, written by Renée Vivien, who lived on the Left Bank in Paris and, at some point, in the same block as Colette. She was dubbed the new Sappho and translated some of Sappho’s poems into French. I mentioned authenticity in an earlier blog and Renée’s poems are indeed authentic: they are dark, using lugubrious imagery, and many express her love for women and, in particular, her intense love affair with the American heiress, Natalie Barney. However, Natalie was very much against the idea of monogamous relationships: she desired that her lovers should be sincere with her, but only while her passion lasted. So, after a bit more than two years together, Renée broke off the relationship. It is thought that this breakup led to her early death at 32 through anorexia caused by alcohol and drug abuse. The poem below is probably the most extreme example of the pain and hurt she felt from Natalie’s infidelity: I shall later be setting it in the original French as part of the song cycle, but for the time being, I shall begin with La divinité inconnue.

I hate she who I love, and I love she who I hate.

I would love to torture most skilfully the wounded limbs of she who I love,

I would like to drink the sighs of her pain and the lamentations of her agony,

I would slowly suffocate the breath from her breast,

I would wish that a merciless dagger pierce her to the heart,

And I would be happy to see the blood weeping, drop by drop from her veins.

I would love to see her death on the bed of our caresses…

I love she who I hate.

When I spy her in a crowd, inside of me I feel an incurable burning desire to hold her tight in front of everyone and to possess her in the light of day.

The words of bitterness on my lips change to become sweet sighs of desire.

I push her away with all my anger, and yet I call to her with all my sensuality.

She is both ruthless and cowardly, and yet her body is passionate and fresh – a flame dissolving in the dew

I cannot look, without feeling breathless and without regrets, at the perfidy in her stare or the falsehood on her lips…..

I hate she who I love, and I love she who I hate.

‘Translation of a Polish Song’ Renée Vivien (1903)

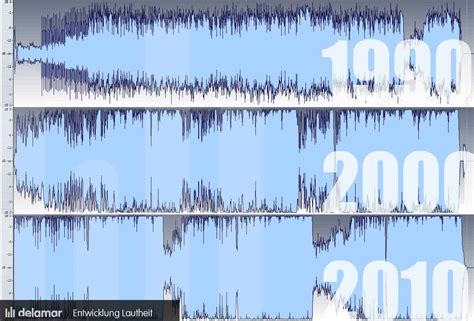

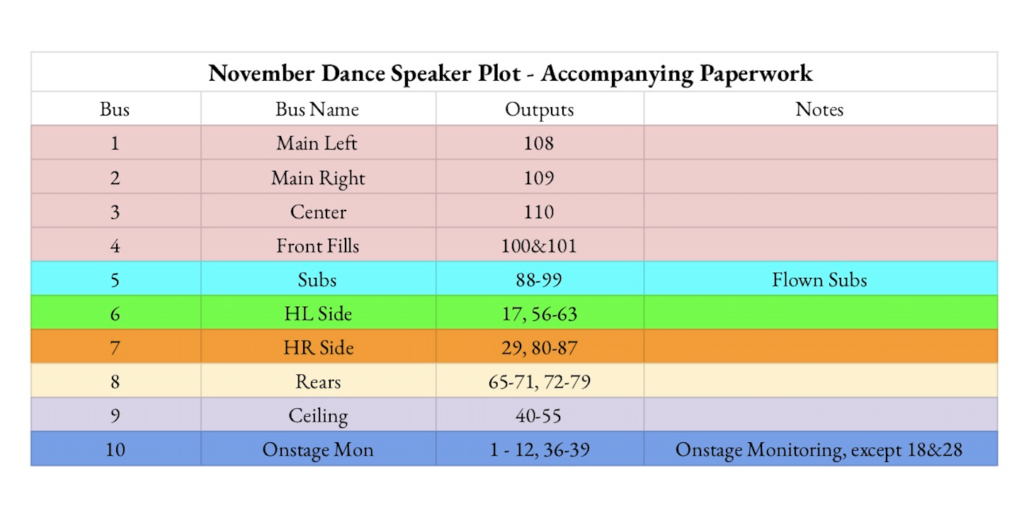

So that’s the poet. What about the music and the setting? I’ve projected the song for soprano: light and angelic against the darkness of the text and the instrumentation, which is cello, B flat clarinet, harp, and electronics. I’m aiming to keep the instrumentation light and translucent so that there are very few tutti passages, and those that there are will be sparse. The final mixing will be key. One practical consideration: find a soprano who can sing in French. For the instruments, for the time being, I will use orchestral samples from Spitfire Audio or the UVI IRCAM Solo Instruments 2, which has quite a few innovative instrumental techniques; obviously, performance versions will use live voice and instruments with live electronics.

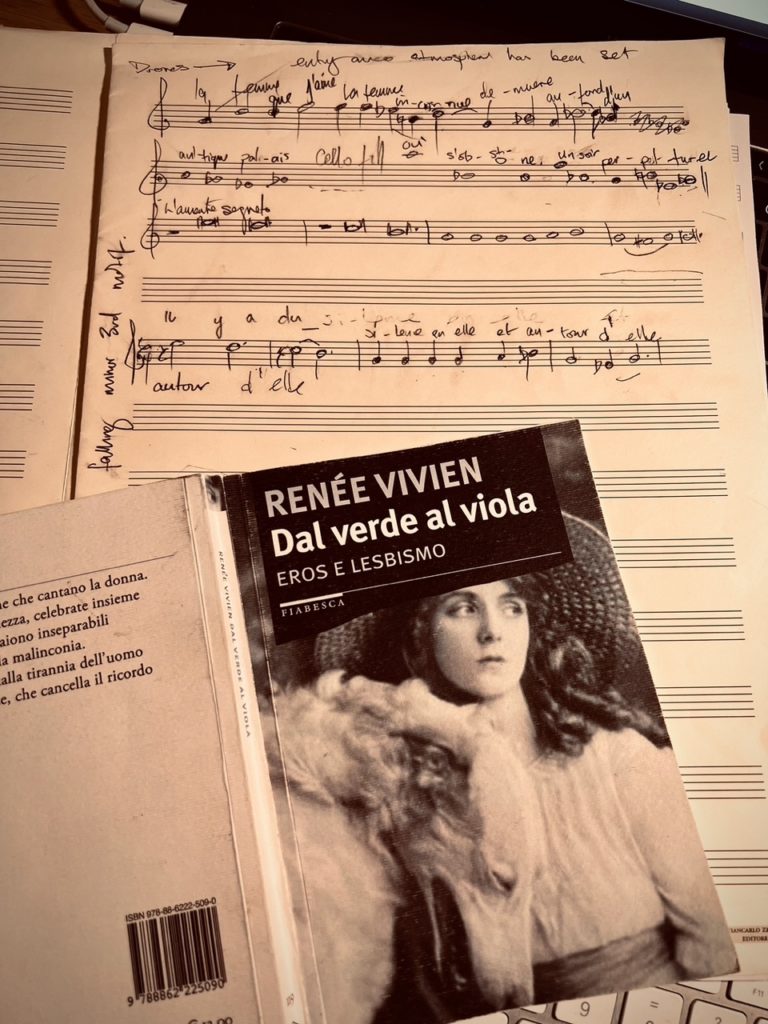

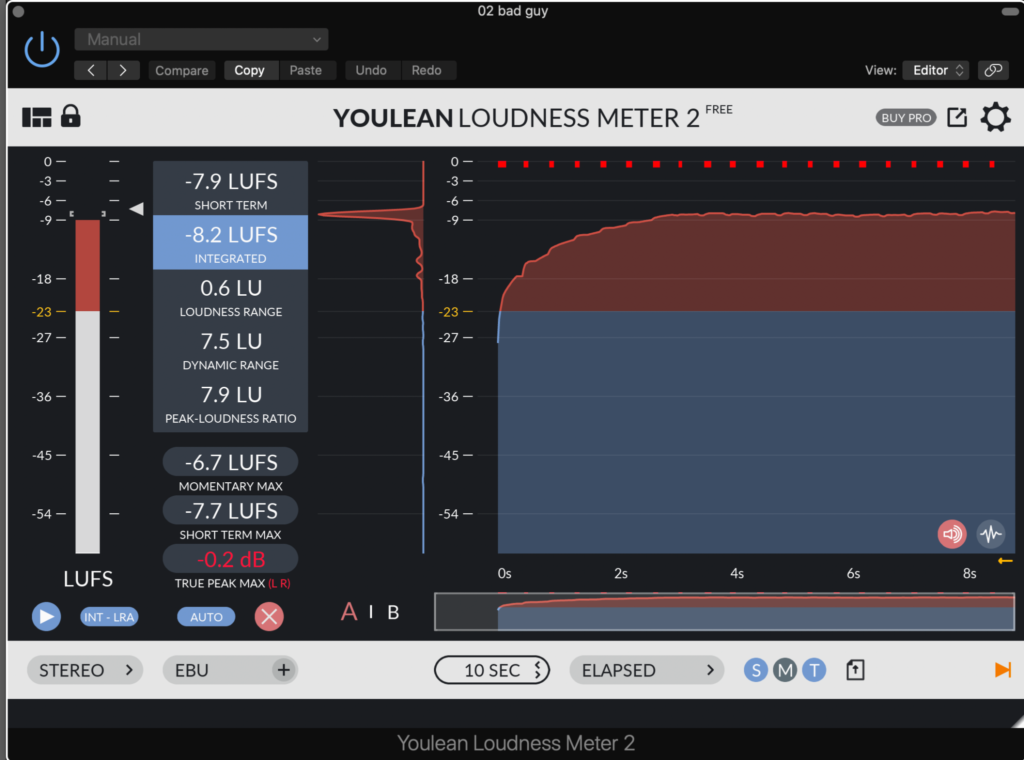

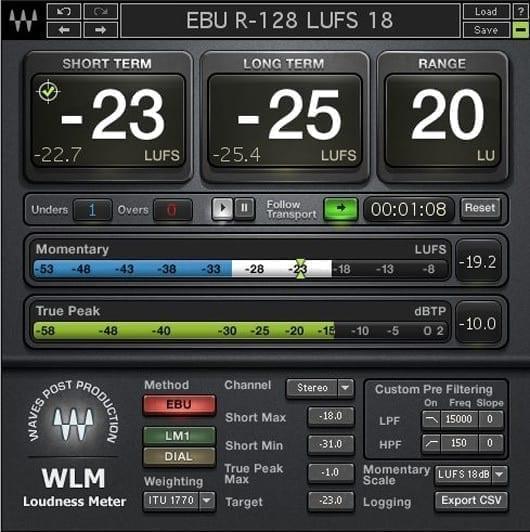

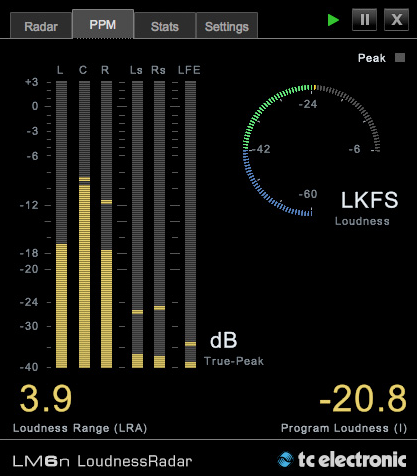

What am I using to create the music? Keyboard and pencil for setting the words and melodies, and here I need to collaborate with the soprano since I’d like to build in some space for improvisation. For the instruments, I’m using MIDI inputs going into Logic Pro – the generous 90-day trial gives me time to get to know the ins and outs. I’m still tempted to put together the individual clips, loops, drones, etc. in Audition since I find it easy to manipulate them and balance and pan the individual tracks. Final mixing, however, is not so good since Audition doesn’t seem to have faders like Logic Pro or Reaper. So, this is telling me to record the MIDI into Logic Pro and tweak as necessary, then bounce the audio tracks to Audition, though I still have to learn to do that. However, if I make the effort and become more familiar with Logic Pro before the trial is up, then this may be ‘the one’, especially since it has enough built-in sounds, instruments, and loops to keep me amused.

One more bit of software: Max/MSP. Now I have a love/hate relationship with software, and this is one that I could really hate since it requires a kind of proto programming. “Ha!” I hear those of you in the know and slick with SuperCollider say. My point is that I’m an artist, not a scientist or mathematician, though I can take an interest when I fancy. No, if I were a violinist, I would no more consider building my own Stradivarius than I would programming music. So, for that reason, I’m using the BEAP modules in Max, which are like my using the EMS 100 analog synthesizer in the late 70s. I patch one module into another to control frequencies, modulations, envelope shapes, etc. I may occasionally write in a small piece that will change my patches, but one step at a time. Right at this moment, I am experimenting with various modules which allow me to treat and shape synthesized sounds as well as recorded sounds. In the last few years, I have created thousands of clips while processing recorded sounds, which I have used to create loops and drones, for example. Therefore, I can take various individual stages of these sounds and repurpose them into something new: run them through a granulator, spectral filter, sampler, sequencer or random note generator. I can treat them in so many ways and mix them with other sounds by multitracking; all I have to do is find them. This is my ‘experimental’!

So far, I have been experimenting with two sine wave oscillators to create some simple frequency modulation, then adding a third, then using low-frequency oscillators (LFOs) to control the oscillation of the sound-producing oscillators. On top of that, I have been adding a soft underlay: a recording of an espresso machine at work, put through a granulator and controlled with another LFO, which causes it to sample the clip at various points and produce a kaleidoscope of sounds.
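For anyone who would rather see the two-oscillator idea as code than as patch cords, here is a minimal sketch in Python. It is not the Max patch: the function names (`fm_tone`, `write_wav`), the frequencies, and the modulation depth are all made-up values for illustration, and it uses the common phase-modulation way of writing FM, where one sine wave bends the phase of another and a slow LFO sweeps how strongly it does so. The granulated espresso machine is left out.

```python
import math
import struct
import wave


def fm_tone(carrier_hz=220.0, modulator_hz=110.0, max_index=3.0,
            lfo_hz=0.5, seconds=2.0, sample_rate=44100):
    """Return a list of samples in [-1, 1] for a simple FM tone.

    All default values are illustrative assumptions, not settings
    taken from the piece.
    """
    samples = []
    for n in range(int(seconds * sample_rate)):
        t = n / sample_rate
        # The LFO sweeps the modulation depth between 0 and max_index.
        depth = max_index * 0.5 * (1.0 + math.sin(2 * math.pi * lfo_hz * t))
        # The modulator is the second sine oscillator.
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        # The carrier's phase is pushed around by the modulator.
        samples.append(math.sin(2 * math.pi * carrier_hz * t + depth * modulator))
    return samples


def write_wav(path, samples, sample_rate=44100):
    """Write mono 16-bit PCM so the result can be auditioned."""
    frames = b"".join(struct.pack("<h", int(s * 32767)) for s in samples)
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(frames)
```

Calling `write_wav("fm_sketch.wav", fm_tone())` would give a two-second file to listen to; changing `modulator_hz` relative to `carrier_hz` is the quickest way to hear the timbre shift from harmonic to clangorous.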

How’s it going so far? Not very far… For the reasons spoken about earlier, there are other things that have occupied my ADHD-addled brain and together added a bit of confusion and depression. But we’re coming out of that now and desperate to get composing again. Besides, I need to take something to the Prof on March 1st. So having finished this, I’ll add something to the soprano melody while still experimenting with the electronics.

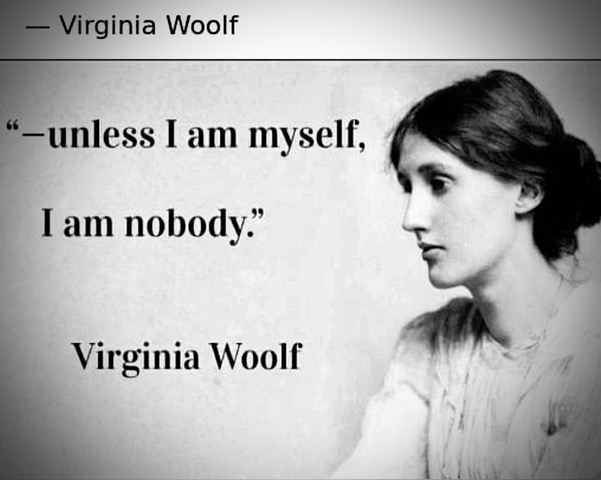

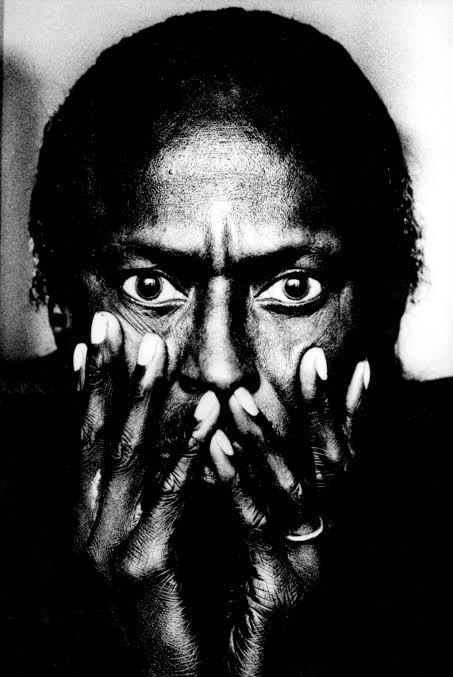

I listen a lot because music is the same as me; and if I am not myself, I am nobody. This is one song that has inspired me for its beauty and its authenticity. It is magical from the start. Then at 59” something almost ecstatic happens… this is the kind of inspiration that will lead me through my piece, plus of course, Renée’s poetry and the sadness of her life, which kind of chimes with me, but now I only cry on a Sunday.

Love from Frà in Torino

She lost her voice That’s how we knew. Music by Frances White, sung by Soprano Kristin Norderval, libretto by Valeria Vasilevski

https://open.spotify.com/track/6CnP4XJbPYB9CHFYPq9bFT?si=8cd81879067d47f6