Gain Without the Pain

Gain Structure for Live Sound Part 1

Gain structure and gain staging are terms that get thrown about a lot, but they are often skimmed over as obvious without ever being fully explained. The way some people talk about it, and mock other people for theirs, you’d think proper gain structure was some special secret skill, known only to the most talented engineers. It’s actually pretty straightforward, but knowing how to do it well will save you a lot of headaches down the line. All it really means is setting each channel’s gain high enough that you get plenty of signal to work with, without risking distortion. It gets discussed a lot in studio circles because it’s incredibly important to the tone and quality of a recording, but in a live setting we have other things to consider on top of that.

So, what exactly is gain?

It seems like the most basic question in sound, but the term is often misunderstood. Gain is not simply the same as volume. It’s a term that comes from electronics, where it refers to how much an amplifier increases the amplitude of the signal passing through it. In our case, it’s how much we change our input’s amplitude by turning the gain knob. In analogue desks, that can mean engaging more of the preamp’s circuitry as you turn (have you ever used an old desk where you needed just a bit more level, so you slowly and smoothly turned the gain knob and it made barely any difference… nothing… nothing… then suddenly it was much louder? It was probably because it crossed the threshold where the next part of the circuit was engaged).

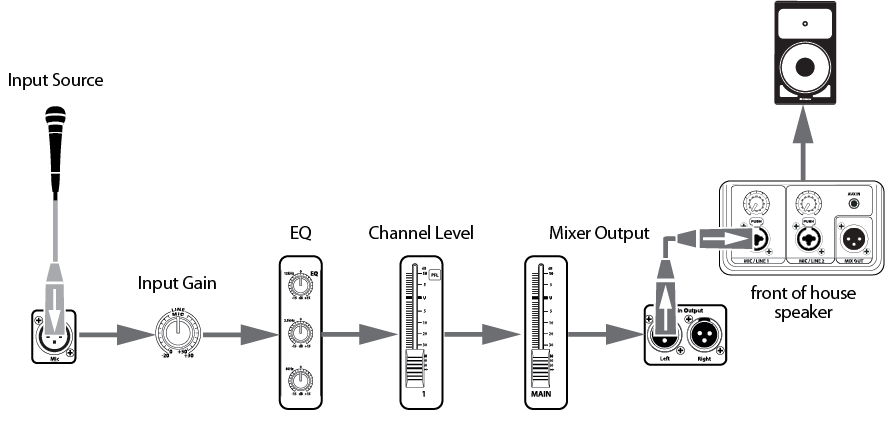

Digital desks do something similar, but using digital signal processing. The control is often called trim instead of gain, especially if no actual preamp is involved. For example, many desks won’t show you a gain knob if you plug something into a local input on the back of the desk, because its only preamps are in the stagebox. You will see a knob labelled trim instead (I do know these knobs are technically rotary encoders, because they don’t have a defined end point, but they are commonly referred to as knobs. Please don’t email in). Trim can also refer to finer adjustments of the input’s signal level, but as a rule of thumb, it’s pretty much the same as gain. Gain is the difference between the signal level when it arrives at the desk and the level when it leaves the preamp at the top of the channel strip, so it makes sense that it’s measured in decibels (dB), a logarithmic unit for expressing ratios.
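Because a decibel expresses a ratio, you can work out a preamp’s gain from its input and output voltages with the standard formula gain (dB) = 20 × log10(Vout / Vin). Here is a minimal Python sketch. The function names and the 2 mV mic level are illustrative assumptions, not figures from any particular desk.

```python
import math

def gain_db(v_in: float, v_out: float) -> float:
    """Voltage gain in decibels: 20 * log10(v_out / v_in)."""
    if v_in <= 0 or v_out <= 0:
        raise ValueError("voltages must be positive")
    return 20 * math.log10(v_out / v_in)

def voltage_ratio(db: float) -> float:
    """Inverse: how many times bigger the output voltage is for a gain in dB."""
    return 10 ** (db / 20)

# Hypothetical example: a mic putting out 2 mV, brought up by the preamp
# to about 1.23 V (roughly +4 dBu, a common nominal line level).
print(round(gain_db(0.002, 1.228), 1))  # 55.8
print(round(voltage_ratio(6), 2))       # 2.0
```

In other words, every 6 dB of gain roughly doubles the voltage, and every 20 dB multiplies it by ten.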

The volume of the channel’s signal, once it’s gone through the rest of the channel strip and any outboard, is controlled by the fader. You can think of the gain knob as controlling input, and the fader as controlling output (let’s ignore desks with a gain-on-fader feature. They make it easier for the user to visualise the gain, but the work is still being done at the top of the channel strip).

Now, how do you structure it?

For studio recording, the main concern is getting a good amount of signal over the noise floor of all the equipment being used in the signal chain. Unless you’re purposefully going for a lo-fi, old-school sound, you don’t want a lot of background hiss all over your tracks. A nice big signal-to-noise ratio, without distortion, is the goal. In live settings, we can view other instruments or stray noises in the room as part of that noise floor, and we also have to avoid feedback at the other end of the scale. There are two main approaches to setting gains:
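Gain staging helps signal-to-noise ratio because the stages later in the chain add noise of their own at a roughly fixed level, while the preamp lifts the signal, and any noise arriving with it (spill, mic hiss), by the same amount. The sketch below models that with made-up levels in dB relative to an arbitrary reference. It assumes the noise sources are uncorrelated, so their powers add, and it ignores clipping entirely.

```python
import math

def db_to_power(db: float) -> float:
    return 10 ** (db / 10)

def power_to_db(power: float) -> float:
    return 10 * math.log10(power)

def output_snr_db(signal_in_db: float, source_noise_db: float,
                  gain_db: float, downstream_noise_db: float) -> float:
    """Signal-to-noise ratio (dB) after the preamp and the stages that follow it."""
    signal_out = signal_in_db + gain_db
    # Noise that arrives with the signal is lifted by the gain too...
    lifted_noise = source_noise_db + gain_db
    # ...while the later stages add their own fixed noise floor.
    # Uncorrelated noise adds as power, not as dB values.
    noise_out = power_to_db(db_to_power(lifted_noise) + db_to_power(downstream_noise_db))
    return signal_out - noise_out

# Hypothetical levels: signal -50 dB, noise arriving with it -110 dB,
# fixed noise floor of the later stages -90 dB.
for gain in (0, 20, 40, 60):
    print(gain, round(output_snr_db(-50, -110, gain, -90), 1))
```

With these numbers the SNR climbs from about 40 dB with no gain towards, but never past, the 60 dB the signal had when it arrived. Beyond that point, extra gain just eats into your headroom.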

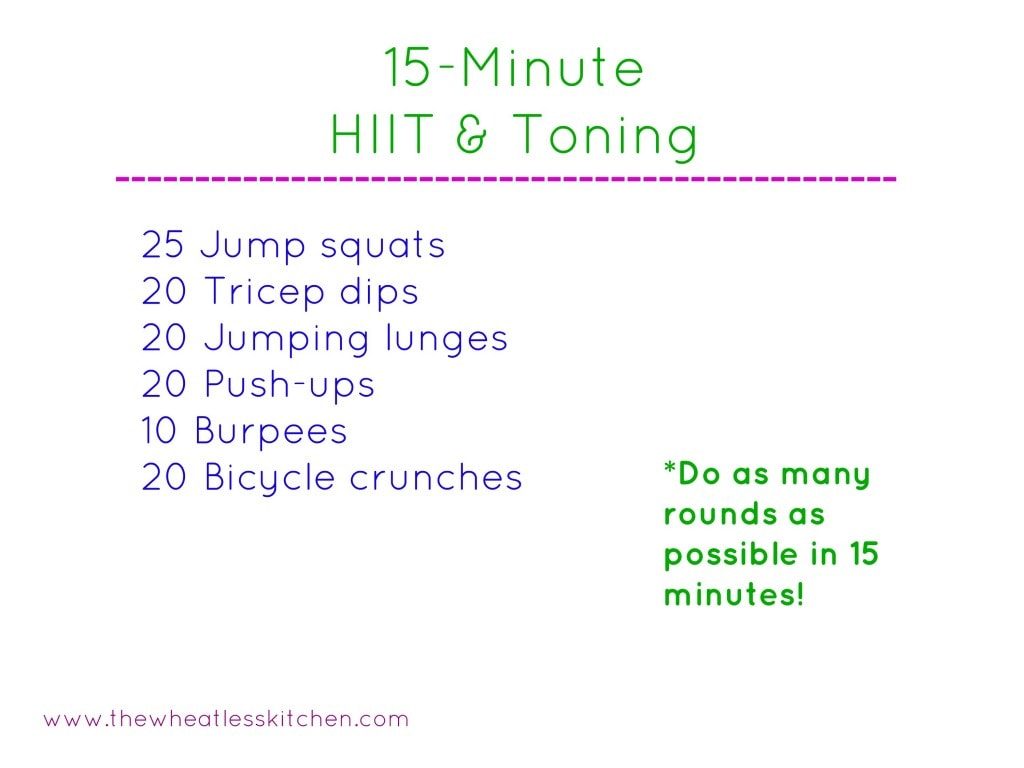

Gain first: With the fader all the way down, you dial the gain in until the signal is tickling the yellow or orange LEDs on your channel meter or PFL at its loudest, without quite going into the red or ‘peak’ LEDs (of course, if it’s hitting the red without any gain, you can put a pad in. You might find a pad switch on the microphone, the instrument or DI box, or the desk. If the mic itself is being overwhelmed by the sound source, it’s best to use its internal pad if it has one, so it can handle the level and deliver a distortion-free signal to the desk). You then bring the fader up until the channel is at the required level. This method gives you a nice, strong signal. It also gives that strong signal to anyone sharing the preamps with you, for example a monitor engineer on the same stagebox, or a multitrack recording. However, because fader scales are marked in dB, which are logarithmic, it can cause some issues. If you look at a fader strip, you’ll see the numbers get closer together the further down they go. So if a channel’s fader is near the bottom and you want to change its level by 1dB, you’d have to move it by about a millimetre. Anything more than a tiny change could make the channel blaringly loud, or so quiet it gets lost in the mix.
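The bunching of dB marks towards the bottom of a fader can be illustrated with a small model. Everything here is an assumption: a hypothetical 100 mm fader with made-up scale marks, and a level that changes linearly in dB between marks. Real desks use their own tapers, so read your own desk’s scale rather than these numbers.

```python
import bisect

# Hypothetical fader scale: (position in mm from the bottom, level in dB).
MARKS_MM_DB = [(10, -60.0), (20, -40.0), (40, -20.0), (60, -10.0), (80, 0.0), (100, 10.0)]

def _segment(pos_mm: float):
    """Return the two scale marks either side of pos_mm."""
    positions = [p for p, _ in MARKS_MM_DB]
    if not positions[0] <= pos_mm <= positions[-1]:
        raise ValueError("position is outside the marked part of the scale")
    i = bisect.bisect_right(positions, pos_mm)
    i = min(i, len(positions) - 1)  # the top mark belongs to the last segment
    return MARKS_MM_DB[i - 1], MARKS_MM_DB[i]

def fader_db(pos_mm: float) -> float:
    """Level at a fader position, interpolating linearly in dB between marks."""
    (x0, y0), (x1, y1) = _segment(pos_mm)
    return y0 + (pos_mm - x0) * (y1 - y0) / (x1 - x0)

def mm_per_db(pos_mm: float) -> float:
    """How far the fader must travel for a 1 dB change at this position."""
    (x0, y0), (x1, y1) = _segment(pos_mm)
    return (x1 - x0) / (y1 - y0)

for pos in (15, 30, 85):
    print(f"{pos} mm: {fader_db(pos):+.1f} dB, {mm_per_db(pos):.1f} mm per dB")
# 15 mm: -50.0 dB, 0.5 mm per dB
# 30 mm: -30.0 dB, 1.0 mm per dB
# 85 mm: +2.5 dB, 2.0 mm per dB
```

With these made-up marks, a 1 dB change takes half a millimetre of travel near -50 dB but two millimetres just above unity, which is why small adjustments are much easier with the fader up near the top of its travel.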

Fader at 0: You set all your faders at 0 (or ‘unity’), then bring the gain up to the desired level. This gives you more control over those small volume changes, while still leaving you headroom at the top of the fader’s travel. It’s easier to see if a fader has been knocked, or to know where to return a fader to after boosting it for a solo, for example. However, it can leave anyone sharing the preamps with weak or uneven signals. If you’re working with an act you’re unfamiliar with, or one that is particularly dynamic, having the faders at 0 might not leave you enough fader travel to lift quieter sections, forcing you to increase the gain mid-show. This is far from ideal, especially if you’re running monitors, because you’re changing everyone’s mix without being able to hear those changes in real time, and increasing the gain increases the likelihood of feedback. In these cases, it might be beneficial to set all your faders at -5dB, for example, just in case.
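Both approaches can land on the same channel level. What differs is how hot the preamp output is (which is what anyone sharing the preamps receives) and how much fader travel is left. Here is a rough sketch with hypothetical levels in dB, assuming a fader that tops out at +10 dB.

```python
from dataclasses import dataclass

FADER_MAX_DB = 10.0  # assumption: the fader tops out at +10 dB

@dataclass
class Channel:
    source_db: float  # level arriving at the preamp (arbitrary reference)
    gain_db: float    # set at the top of the channel strip
    fader_db: float   # set at the bottom of the channel strip

    @property
    def preamp_out_db(self) -> float:
        # What monitors or a multitrack sharing the preamp would receive
        return self.source_db + self.gain_db

    @property
    def output_db(self) -> float:
        return self.preamp_out_db + self.fader_db

    @property
    def fader_push_db(self) -> float:
        # How far the fader can still be pushed up
        return FADER_MAX_DB - self.fader_db

# Same source, same target level of -20 dB, three different set-ups
setups = {
    "gain first": Channel(source_db=-50, gain_db=45, fader_db=-15),
    "fader at 0": Channel(source_db=-50, gain_db=30, fader_db=0),
    "fader at -5": Channel(source_db=-50, gain_db=35, fader_db=-5),
}
for name, ch in setups.items():
    print(f"{name}: preamp {ch.preamp_out_db:+.0f} dB, output {ch.output_db:+.0f} dB, "
          f"{ch.fader_push_db:.0f} dB of fader left")
```

Starting at -5dB buys an extra 5 dB of upward travel for quiet sections, at the cost of running 5 dB more gain.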

In researching this post, I found that some people set their faders as a visual representation of their mix levels, then adjust their gains accordingly. It isn’t a technique I’ve seen in real life, but if you know the act well and it makes sense to your workflow, it could be worth trying. Once you’ve set your gates, compressors, EQ and effects, and heard all the channels summed together, you’ll probably need to go back and adjust your gains or faders again, but these approaches will get you in the right ballpark very quickly.
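Part of why levels need revisiting once everything is playing is that channels add up. As a rough rule, uncorrelated signals add as powers, so two equal channels sum to about 3 dB louder than either one on its own (identical, in-phase signals add to about 6 dB). A minimal sketch with hypothetical levels; real musical peaks rarely line up this neatly.

```python
import math

def sum_uncorrelated_db(levels_db):
    """Combined level of uncorrelated signals: their powers add, not their dB values."""
    total_power = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_power)

# Eight channels, each sitting around -20 dB (hypothetical levels)
print(round(sum_uncorrelated_db([-20.0] * 8), 1))  # -11.0
```

So eight channels that each look sensible on their own can push the mix bus about 9 dB hotter than any single one of them.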

All these methods have their pros and cons, and you may want to choose between them for different situations. I learned sound using the first method, but I now prefer the second, especially for monitors. It’s clear where all the faders should sit, even though the aux sends might be completely different and change from song to song. Despite what some people might say, there is no gospel for gain structure that must be followed. In part 2 I’ll discuss a few approaches for different situations, and how to get the best signal-to-noise ratio in those circumstances. Gain structure isn’t some esoteric mystery, but it is important to get right. If you know the underlying concepts, you can make informed decisions to get the best out of each channel, which is the foundation for every great mix.

Further reading/watching:

- https://www.soundonsound.com/sound-advice/q-how-should-i-optimise-my-gain-structure
- https://mikeriversaudio.files.wordpress.com/2010/10/gainstructure.pdf
- https://www.youtube.com/watch?v=IJVdBTfpUEQ&t=592s
- https://www.youtube.com/watch?v=1hwT15SSwgU&t=0
- https://www.youtube.com/watch?v=SPXKFjrgDr0&t=0