In the last months of 2020, I started a master’s degree that has pleasantly surprised me. Although it may seem unrelated to my professional work in audio, studying “cultural management” has introduced me to a new and exciting world, one that is more closely related to my interests than it appears.

But why do I want to talk about acoustemology and cultural management when I have worked in sound engineering for almost 14 years, focusing only on its technical aspects? In one of my readings I came across the term acoustemology. I did not know it at the time, but its etymological roots, a blend of acoustics and epistemology, caught my attention.

Ethnomusicology and some branches of anthropology, together with acoustics, have already produced studies of music, ecological acoustics, and soundscapes that help interpret sound waves as representations of collective relationships and social structures. Examples include the sound maps of different cities and countries, which document indigenous languages, music, urban areas, forest areas, and more:

- Mexican sounds through time and space: https://mapasonoro.cultura.gob.mx/
- Sound Map of the Indigenous Languages of Peru: https://play.google.com/store/apps/details?id=com.mc.mapasonoro&hl=en_US&gl=US
- Meeting point for the rest of the sound maps of the Spanish territory: https://www.mapasonoro.es/
- The life that was, the one that is, and the one that is collectively remembered in the Sound Map of Uruguay: http://www.mapasonoro.uy/

As Carlos de Hita says, our cultural development has been accompanied by soundscapes, or soundtracks, that include the voices of animals, the sound of wind and water, reverberation, temperature, echo, and distance (De Hita 2020).

It is with the term acoustemology, introduced by Steven Feld in 1992, that these ideas converge: a soundscape that is perceived and interpreted by those who resonate with it through their bodies and lives, in a particular social space and time. Acoustemology attempts to articulate an epistemological theory of how sound and sonic experiences shape our different ways of being in and knowing the world, and our cultural realities.

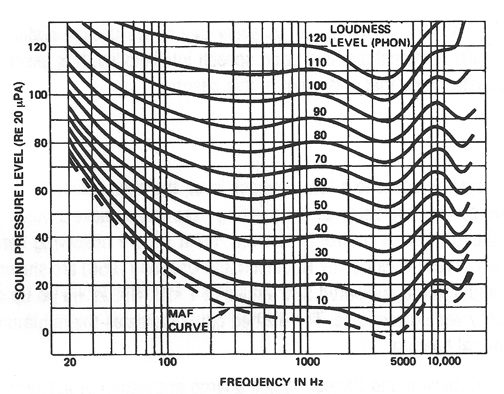

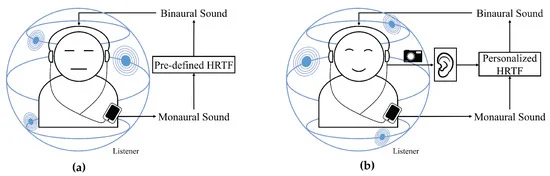

But then another concept comes into play: perception. Perception is mediated by culture. The way we see, smell, or hear is not freely determined; it is the product of various factors that condition it (Polti 2014). Perception is what ultimately determines the success of our work as audio professionals, so with this post I would like to take a moment to reflect on the following ideas, and I invite you to reflect on them with me.

As professionals dedicated to the world of sound, do we stop to think about the impact of our work on the cultures in which we are immersed? Do we take that culture into account when we produce an event? Or do we work only according to economic and technological guidelines rather than cultural ones?

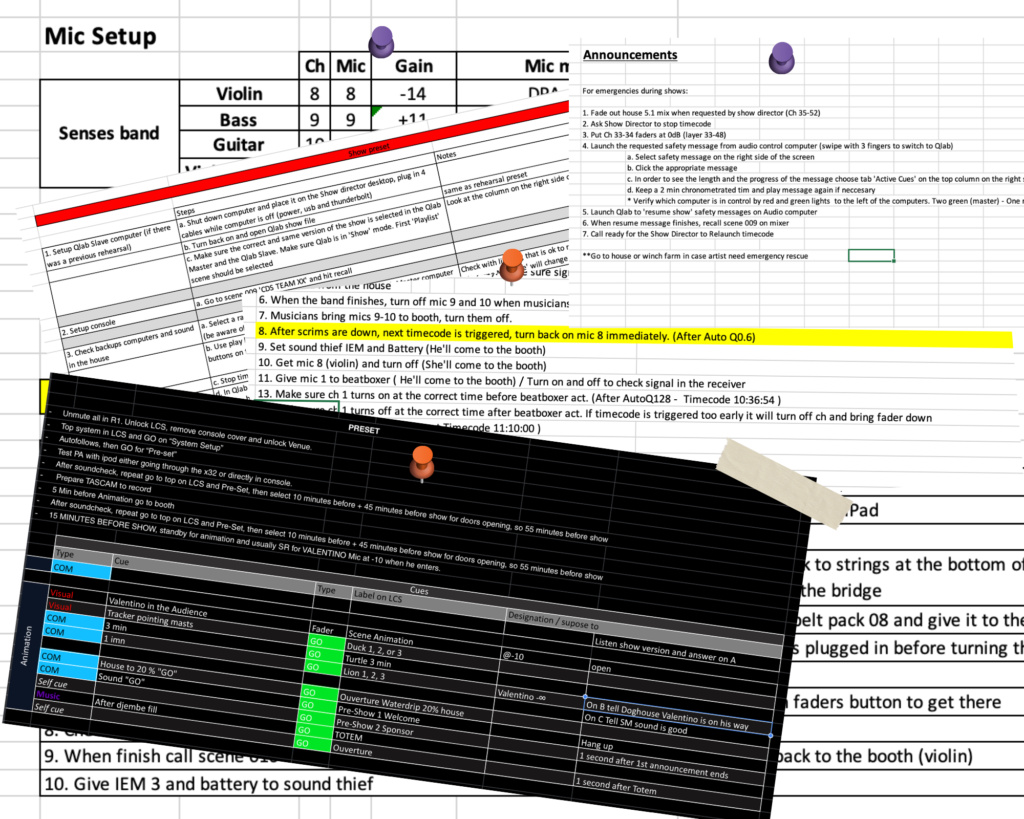

When we plan an event, do we use only what is really needed? Do we set a limit, or, to feed our ego, do we use everything manufacturers sell us without stopping to think about the economic, social, and environmental impact that this planning has on the place where the event will take place? Do we really care about what we want to convey, or only about making the audio as loud as possible, or even louder? Do we stop to think about what kind of amplification an event really requires, or do we just want lots of microphones and lots of speakers (immersive sound, even better), make it loud, and good luck understanding anything? Do we care about what the audience really wants to hear? Are we aware of noise pollution, or do we just want the concert to be so loud that people can’t even hear their own thoughts?

Are we mindful of making recordings that reflect and preserve our own culture and that of the performer, or do we only care about winning awards at all costs? Have we shared everything we know about audio, or are we still competing to prove that we know it all, that “I am technically the best”? Or is it time to humanize our practice and place our work as audio professionals in a cultural context?

I remember an anecdote from a colleague who told how, after finishing the entire setup for a concert in a Mexican city (I don’t remember the details), the event could only go ahead after the blessing of the shamans and the approval of the gods.

As audio professionals, our work should focus on telling stories in more than acoustic terms: stories that bear witness to our sociocultural context and to who we are.

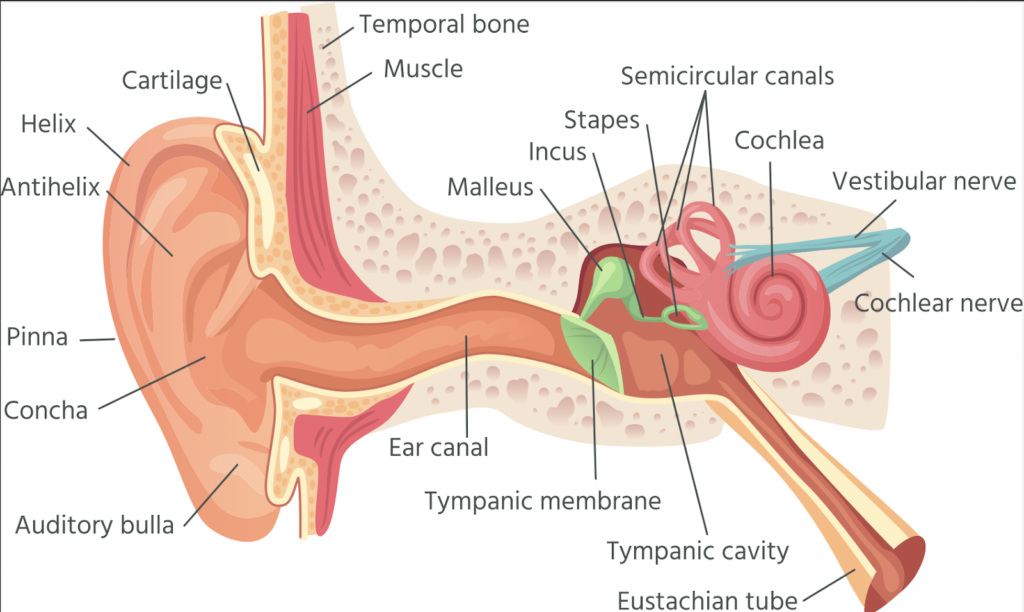

“Beyond any consideration of an acoustic and/or physiological order, the ear belongs to a great extent to culture; it is above all a cultural organ” (García 2007).

References:

Bull, Michael, and Les Back, eds. 2003. The Auditory Culture Reader. Oxford and New York: Berg.

De Hita, Carlos. 2020. “Sound Diary of a Naturalist.” We Learn Together, BBVA. Spain. Available at https://www.youtube.com/watch?v=RdFHyCPtrNE&list=WL&index=14

García, Miguel A. 2007. “The Ears of the Anthropologist: Pilagá Music in the Narratives of Enrique Palavecino and Alfred Métraux.” Runa 27: 49-68.

García, Miguel A. 2012. Ethnographies of the Encounter: Knowledge and Stories about Other Music. Anthropology Series. Buenos Aires: Ediciones del Sol.

Macchiarella, Ignazio. 2014. “Exploring Micro-Worlds of Music Meanings.” The Thinking Ear 2 (1). Available at http://ppct.caicyt.gov.ar/index.php/oidopensante

Rice, Timothy. 2003. “Time, Place, and Metaphor in Music Experience and Ethnography.” Ethnomusicology 47 (2): 151-179.

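Polti, Victoria. 2014. “Acustemología y reflexividad: aportes para un debate teórico-metodológico en etnomusicología” [Acoustemology and reflexivity: contributions to a theoretical-methodological debate in ethnomusicology]. XI Congreso IASPM-AL: Música y territorialidades: los sonidos de los lugares y sus contextos socioculturales [Music and territorialities: the sounds of places and their sociocultural contexts]. Brazil.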

Andrea Arenas is a sound engineer and her first approach to music was through percussion. She graduated with a degree in electronic engineering and has been dedicated to audio since 2006. More about Andrea on her website https://www.andreaarenas.com/
