AI in Audio and Music: Dazzling Development or Threat to Creativity?

Artificial Intelligence, or AI, has been a hot topic for some time in the news, on social media, and around the physical and virtual water coolers. Numerous content creator accounts dedicated to AI-generated art and videos have sprung up, featuring spectacular creations from the beautiful to the bizarre, and amusing mash-ups (one of my favourites being a techno version of Harry Potter set in Berlin). You can now hear long-gone artists such as Freddie Mercury sing new songs that sound eerily realistic, or generate a whole album’s worth of lyrics, melodies, harmonies and even entire songs in the genre of your choice using prompts and suggestions.

Is this impressive advancement a tool to be celebrated and embraced? Or should we fear what AI is capable of creating, and that it will do a better job than humans and make certain jobs and careers obsolete? There are many complicated moral questions attached to the use of AI, stemming from the multitude of fascinating ways that AI is being used in audio and music.

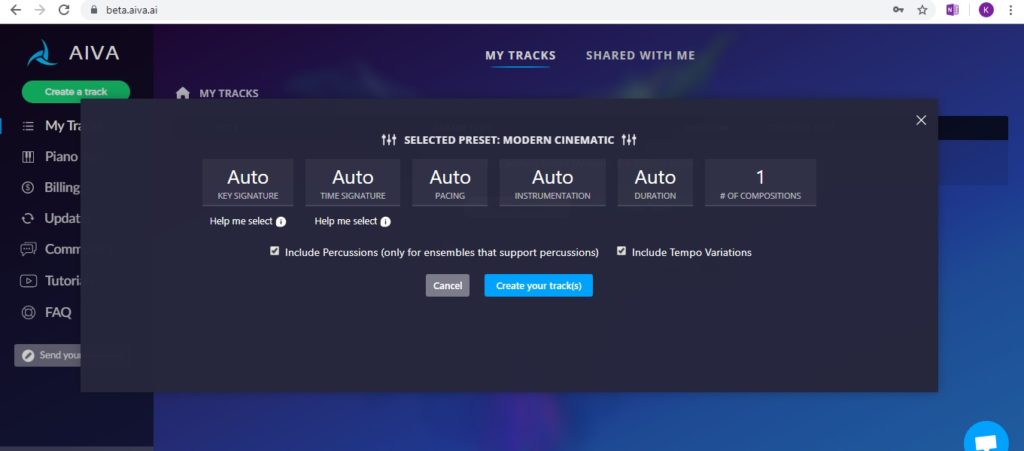

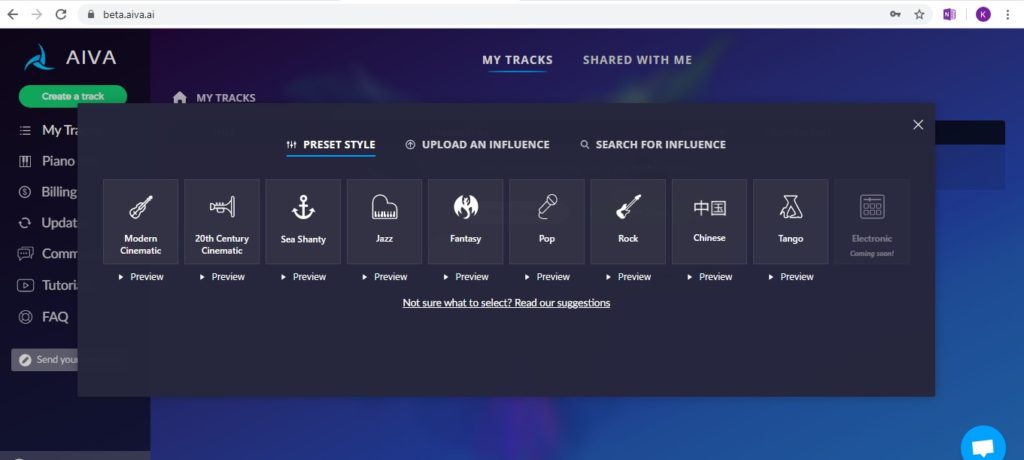

Generative AI

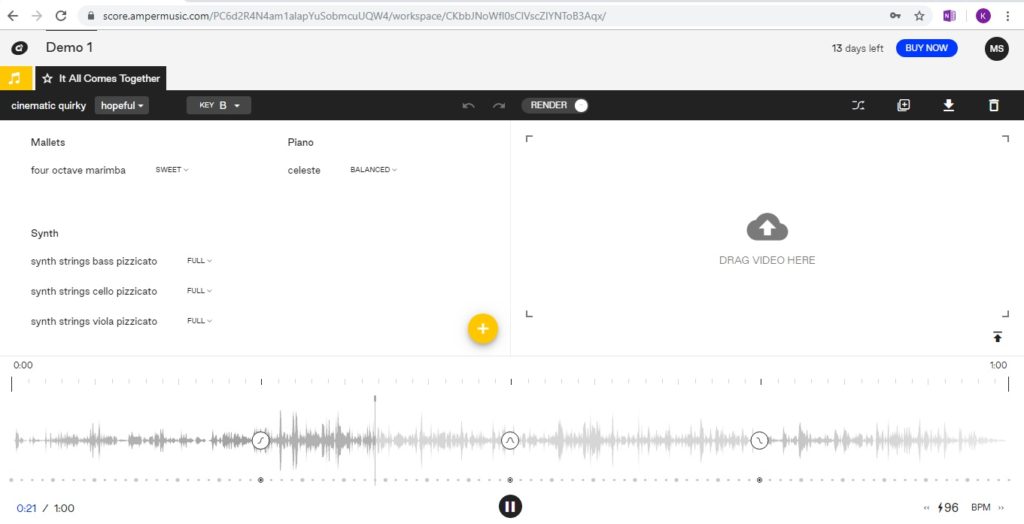

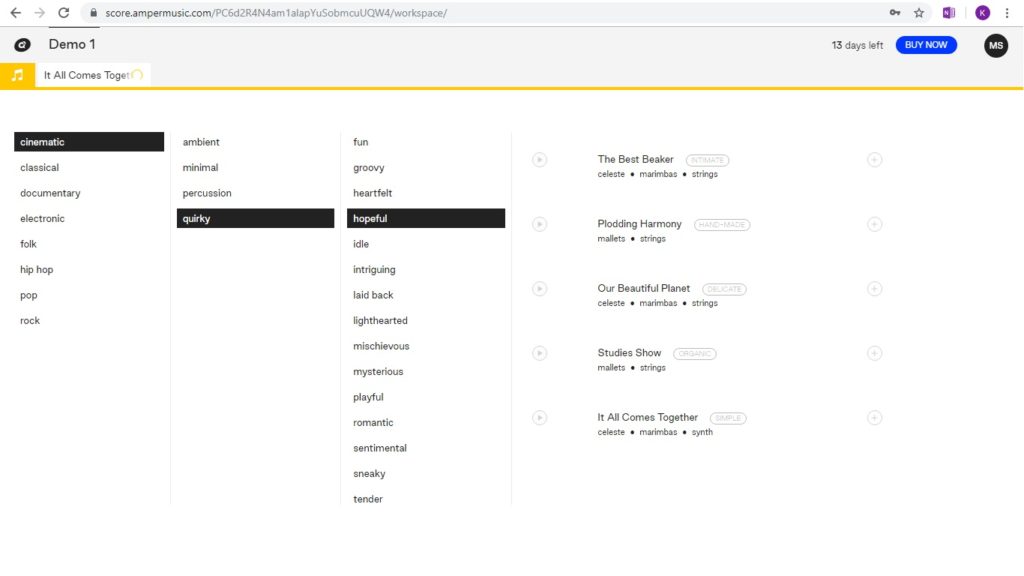

Generative AI is a digital tool that can generate audio content from text prompts, based on what it learns from existing audio media. Productive uses include sound design (think of the possibilities for otherworldly sci-fi sounds!), voice synthesis and text-to-speech creation for narrated entertainment, information services and accessibility aids (a huge benefit for people with speech, vision or mobility difficulties), and music creation to help take songwriters’ and composers’ musical visions to the next level, or allow novice music lovers to explore music creation.

Audio tools for efficiency

There are many AI tools that can make our work as sound engineers more efficient, for instance by streamlining workflows and automating file creation and management. AI algorithms can also be used to great effect in audio restoration, to separate the wanted signal from unwanted noise. A wonderful example is AI being used to extract John Lennon’s vocals from a lo-fi ’70s demo recording, which allowed the surviving Beatles members to complete and release a new Beatles song, “Now and Then”.

AI mixing and mastering tools can analyse a track and suggest improvements or provide a useful starting point. Given music-making’s democratisation and the fact that anyone can release a track into the world, often without the means to employ an expert, an AI plugin might be the next best option. It may also function as a training tool, to help novice sound engineers compare different mix possibilities and fine-tune their listening skills.

Technical disadvantages

While AI is learning and improving at an impressive (and perhaps alarming) rate, it is still far from perfect. Songs generated by AI often have odd or awkward-sounding moments, whether it’s lyrics with weird grammar, or a glitchy transition. Synthesised voices can sound unnatural and robotic. Poor audio quality and inconsistencies mean that, for now at least, a song recorded and mixed by a human sound engineer will usually sound superior.

Moral concerns

The cloning and mimicry of famous voices (commonly known as “deepfake” technology) and songwriting styles can be used to twist someone’s words, create misinformation, or “steal” someone’s musical style and generate new content. The question of who owns and retains copyright of content generated by an AI tool, and whether it’s fair to generate new music based on someone’s music without compensating them, is a complex one. This is an issue also widely debated in the visual art world, with companies such as Stability AI and Midjourney being sued by groups of artists. It’s likely that new laws surrounding AI and intellectual property will need to be passed in the future.

Lastly, the possibility of AI taking the place of humans in various creative or technical jobs does seem a potential threat, as AI improves day by day. As we navigate these astounding technological advancements, hopefully we’ll find the best way forward to use AI for positive productivity, efficiency and creativity, and not for malicious intent.

What does AI say about it all?

Perhaps ChatGPT’s own response, when asked what AI means for the music and audio industries, best sums it up:

“Overall, AI has the potential to streamline various processes in the audio and music industries, fostering innovation, efficiency, and new creative possibilities. However, it also raises questions about copyright, authenticity, and the role of human creativity in the artistic process.”

(P.S. The hilarious image accompanying this blog post was AI generated using Deep Dream Generator. The text was not: it was written by me, a bona fide human!)