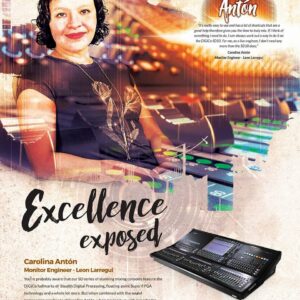

Love of Learning – Carolina Anton – Sound Engineer

Carolina Anton is a freelance sound engineer based in Mexico City. She works as a FOH and monitor engineer and specializes in sound design and optimization. She works with several sound companies, including 2Hands Production, Eighth Day Sound, and Britannia Row. She has done international tours with artists including Zoé, Natalia Lafourcade, Leon Larregui, and Mon Laferte. Carolina is also the owner of GoroGoro Studio, an audiovisual studio for immersive sound mixing experiences. She is the representative in Mexico of ISSP Immersive Sound software for live shows and a partner in 3BH, an integrative company that specializes in architectural, acoustic, and audiovisual technological design. In her spare time, she is the head of the SoundGirls Mexico City Chapter.

Lifelong Love for Learning

Carolina grew up with a Montessori education, which instilled a lifelong love of learning and gave her a solid foundation for her work and life. During her high-school years, she was enrolled at the Monterrey Institute of Technology and Higher Education, which gave her a solid understanding of engineering and the use of technology. At the same time, she became interested in Japanese culture and started to study the Japanese Tea Ceremony. At age 19, she started to study music and took a percussion diploma at Berklee College of Music, then went on to study with teachers from Escuela Superior de Música in Mexico City. She started playing drums at pubs and restaurants and formed her own band. Soon after, she was invited to play drums for artists such as Laura Vazquez (ex-keyboardist of Fito Paez).

The Spark

In 2002, she received a scholarship to continue her Japanese studies at Urasenke Gakuen Professional College of Chado, Midorikai in Kyoto, Japan. In 2004, she graduated and returned to Mexico City. Upon returning, she found that she had no one to play music with and decided to find a job in music so that she could play drums; she also thought she could work in audio. Carolina remembers saying to herself, “It looks very easy pushing all those buttons and moving faders! And I love music… but I actually had no idea what I was getting into. From that day I started this wonderful but complicated path.”

At the time there were few professional audio programs in Mexico City, so she took every course she could find, and she says she still keeps up to date with training and certifications. With her love of learning, she bought all the books on audio she could find and studied on her own. She says it took her over four years to find someone who would help her get her foot in the door: “All rental companies told me that as a woman I had no future in this… ‘A woman cannot carry cases or cables! Work at night? Travel with men? This work is impossible for a woman like you!’ they said. After almost three years I finally found one company that would support me and help me forge my own path from below. At that point, I swore that nobody was going to slow me down.”

While Carolina saw herself working in a recording studio, that was not to be: the company that gave her a shot was a live sound company, and she decided to “just let it flow, and along the way I realized that I really like this job, so I started talking to all the audio engineers close to me, without realizing that I was fully involved and made to work in live sound. I knew then that I like challenges and excitement, plus I work better under pressure.”

Career Start

She started as a PA tech and worked her way up to FOH and monitors, and now mainly freelances with 2Hands Production Services and Eighth Day Sound. Carolina’s first national tour was as a system engineer for Zoé Unplugged in 2011. Her first contact with international artists came the same year with Earth, Wind & Fire, working with Eighth Day Sound, with whom she has since worked on tours and festivals such as Noel Gallagher’s High Flying Birds, Cage The Elephant, The Cranberries, Faithless, and Electric Forest Festival, among others.

The first time Carolina got to mix was unexpected: “I remember that my job was only to do the PA design and tune it, but the musicians were late and I had to prepare the scene on a VENUE SC48, which I didn’t know very well how to set up (I am very good with mixers, so it didn’t take me long to understand it). After I finished setting up the mixer, Gloria’s staff asked me to check the monitors and PA (so I sent some pink noise and tested the mics). I was so relaxed and did that thinking that at some point the main engineer would come; unconsciously I began to place filters and make a pre-mix (good for me!). When the musicians arrived and I didn’t see any staff with them, I asked for the engineer, and with all the calm in the world they told me… ‘You are!’ At that moment I got very nervous, but luckily I had prepared everything correctly, so the show flowed perfectly. Definitely, in this profession we must be prepared for everything.”

Carolina has toured as a monitor engineer for Gloria Gaynor, Kool & the Gang Mexico 2012, Janelle Monáe Mexico 2012, Vetusta Morla, Natalia Lafourcade, Leon Larregui, and Mon Laferte, and before the pandemic hit she was on tour with MexFutura. She has run FOH for Café Tacvba & Zoé, Everyone Orchestra, Madame Gandhi, Hellow Festival, PalNorte Festival, Electric Forest Festival, and BPM Festival. She has been a system engineer for Marc Anthony Mexico 2012, Empire of the Sun “Walking on a Dream” Tour Mexico City 2011, Bunbury “Licenciado Cantinas” Tour Mexico 2012, Zoé & Café Tacvba touring for 5 years (2011 – 2016), MTV Unplugged Miguel Bosé, Enrique Bunbury, Pepe Aguilar, Zoé, Kinky, and 90’s Pop Tour 360º – 2019.

Her credits also include work as recording sound engineer and/or assistant sound engineer for Caifanes, MTV Unplugged Pepe Aguilar, and Viva Tour: En vivo – Thalia, as well as production of the live streaming for the Vive Latino festival 2015.

Career Today

In 2015, she was invited to become a partner in 3BH, an integrative company that specializes in architectural, acoustic, and audiovisual technological design, working with post-production and music studios in Mexico and LATAM. The engineers at 3BH integrate projects at the highest level, covering construction, electricity, insulation and acoustic conditioning, monitoring design and calibration in stereo, 5.1, 7.1, and Atmos formats, and signal design, and they work with the highest technology. She is also the owner of GoroGoro Studio, an audiovisual studio specializing in immersive sound mixing experiences and traditional formats. Its most recent material is a video with immersive sound by the band MexFutura, presented on Apple Music.

Never Stop Learning

She has certifications in Shure Advanced RF Coordinator for Axient Digital Systems, Avid Pro Tools, Meyer Sound – Sound System Design of Meyer Sound & SIM 3 Training and System Design, SMAART Software Applications & Procedures Training and System Design, Martin Audio Professional Loudspeaker Systems & MLA Certified Operator Training Program, L-Acoustics System Fundamentals, Audinate DANTE certified levels 1 – 3, and SSL Live console, Yamaha, DiGiCo & MIDAS.

Carolina’s long-term goals are to keep touring and learning. “A long-term goal that I have managed to achieve was mixing in immersive sound. I have been specializing in this area for several years and I find it very fun and interesting… I think it is the future in various areas of sound.”

What do you like best about touring?

I love traveling, meeting people, having the opportunity to use different gear, and mixing at different venues. I love trying different foods and being able to learn about different cultures.

What do you like least?

I miss my family very much. I don’t get invitations from friends because they think I am always away. Sometimes it is nice to know that you are going to return home every night. I also dislike not having enough time to visit the city where we are working.

What is your favorite day off activity?

Watching movies, enjoying the silence and nature, being with my family, playing with my cats, reading, and sleeping.

What if any obstacles or barriers have you faced? How have you dealt with them?

I had many obstacles, which I no longer remember, but surely the main one was being a woman working in sound. For a long time, I was angry about the rejection I got from many colleagues, but I realized that I was getting attention and was losing time, so I decided to ignore all the negative comments and focus on finding a mentor.

Fortunately, I found very good people along the way, who have helped me pass through all these obstacles and taught me in a professional manner to achieve my goals.

I have become more secure in my job and I learned that if you have a good attitude and confidence in what you are doing you will be fine!

Advice you have for other women and young women who wish to enter the field?

If you have a true love for your profession, do it without stopping!

Every time you have a problem do a self-evaluation and trust yourself.

Be humble, but act with decision and commitment; I am sure that you will achieve all your goals.

Must have skills?

Listen to other people, be objective and patient.

Favorite gear

D&B, Martin Audio, L-Acoustics, SSL Live, DiGiCo, MIDAS, SIM 3 Audio Analyzer, Smaart Software, Lake Processors, DPA & Sennheiser mics.

Closing thoughts

I am very happy and proud to represent SoundGirls in Mexico. I’m sure there will be many opportunities for growth and improvement for all women and men in this industry.

I am infinitely thankful to my family (my mother and my brother), who support me in all my decisions, and to my boyfriend, my mentors, and friends, who were and are always by my side.

I always keep in mind the basic principles of The Way of Tea: harmony, respect, purity, and tranquility (wa, kei, sei, jaku). These are the roots of my life.

It is very important to have an internal balance between ourselves and our work. Many times, we focus so much on our work that we forget it is very important to take care of ourselves. It is also very important to be consistent with what we do and say at all times.

Many have confused my tenacity and determination with recklessness, but there is a big difference: I take risks to break visible and invisible barriers and achieve my goals and objectives, always being humble and respectful with those around me and with myself.

More on Carolina

Carolina Anton on The SoundGirls Podcast
