Thoughts On War and Peace and Music

Well, this month’s blog coincides with the start of spring, normally a time when our attention turns to thoughts of renewal, growth and change, and maybe to getting out more, even to travel:

Whan that Aprill with his shoures soote The droghte of March hath perced to the roote, And bathed every veyne in swich licour Of which vertu engendred is the flour; Whan Zephirus eek with his sweete breeth Inspired hath in every holt and heeth The tendre croppes, and the yonge sonne Hath in the Ram his half cours yronne, And smale foweles maken melodye, That slepen al the nyght with open ye (So priketh hem Nature in hir corages), Thanne longen folk to goon on pilgrimages, And palmeres for to seken straunge strondes, To ferne halwes, kowthe in sondry londes; And specially from every shires ende Of Engelond to Caunterbury they wende, The hooly blisful martir for to seke, That hem hath holpen whan that they were seeke.

When April with its sweet-smelling showers Has pierced the drought of March to the root, And bathed every vein (of the plants) in such liquid By which power the flower is created; When the West Wind also with its sweet breath, In every wood and field has breathed life into The tender new leaves, and the young sun Has run half its course in Aries, And small fowls make melody, Those that sleep all the night with open eyes (So Nature incites them in their hearts), Then folk long to go on pilgrimages, And professional pilgrims to seek foreign shores, To distant shrines, known in various lands; And specially from every shire’s end Of England to Canterbury they travel, To seek the holy blessed martyr, Who helped them when they were sick.

Thus wrote Geoffrey Chaucer at the start of his Canterbury Tales in the 14th century.

Now, wouldn’t it be nice if, after more than two years of suffering Covid-19 and all that it has entailed, we could look forward to a real flowering of our humanity and our art, and be out and about more freely? The UK recently lifted its remaining Covid travel restrictions for people flying in and out of the country. But… Ci mancava solo quello, as we often say in Italy. That’s all we needed: a war! So, as nice as it would be to inhabit Chaucer’s mindset, after two years of often crushing and depressing living, adapting to life in the face of a worldwide pandemic, I ask myself: when is this all going to end?

As if all this were not enough, a combination of personal losses pushed me into a deep depression, something I had never really suffered from before. As a result, I felt nothing, nothing gave me joy and, therefore, I did nothing. Well, that’s not quite true. I worked hard with the feminist group Non Una di Meno in preparation for International Women’s Day on 8th March, which we called Lotto Marzo (a play on otto marzo, “8th March”, and lotto, “I fight”): not a day of celebration but a day of activism, to remind the world that there are still many battles to fight and to win. A short clip captured one event during our march, in front of Turin’s main railway station; the theme, in this case, was women’s health issues.

This is our war; our fight for gender equality.

The other war: what is it for?

Carla Lonzi, in her Manifesto di Rivolta Femminile (Manifesto of Female Revolt) of 1970, said: “War has always been the specific activity of man and his way of displaying his virility.”

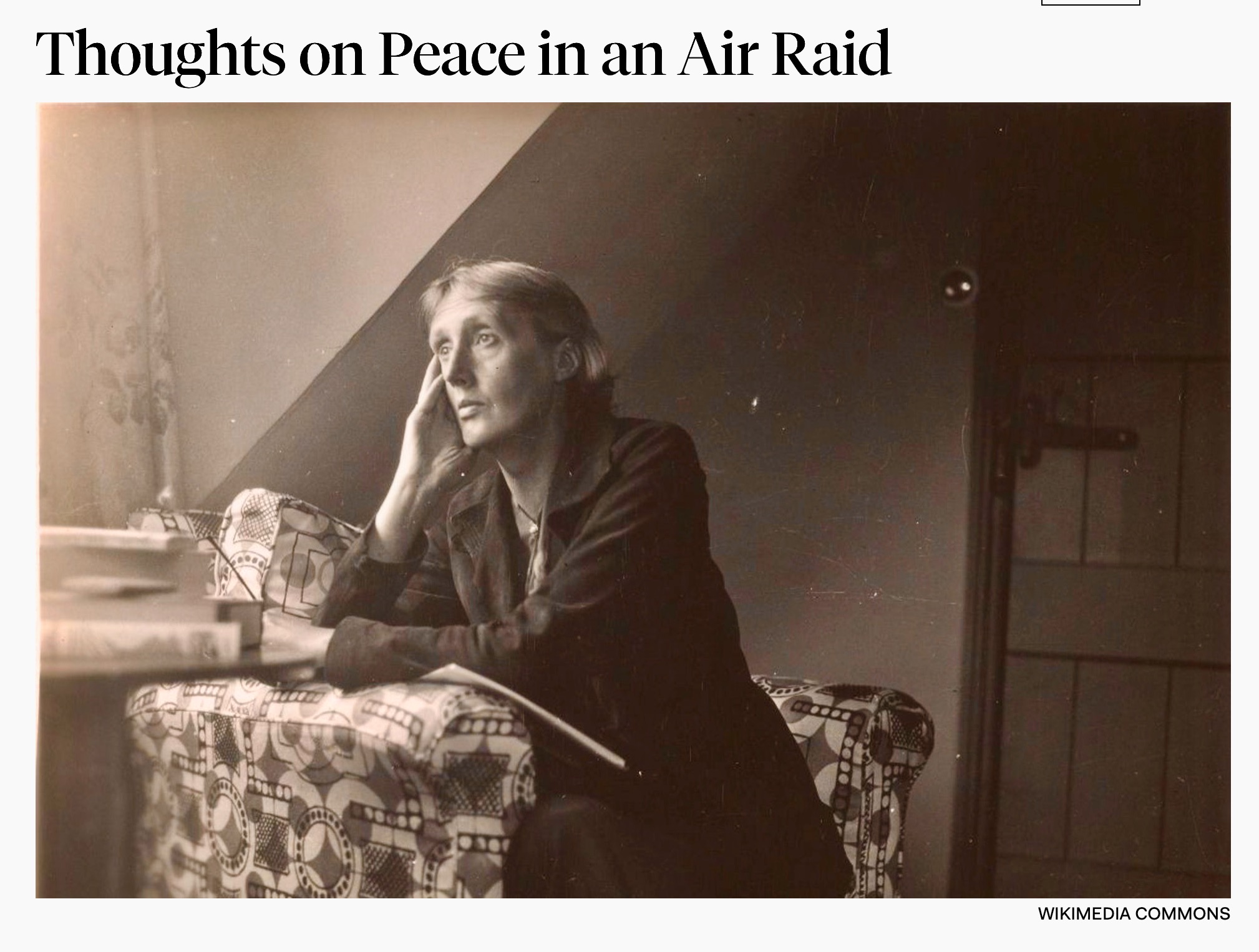

Virginia Woolf, in her essay Thoughts on Peace in an Air Raid, recalled reading in The Times that morning “a woman’s voice saying, ‘Women have not a word to say in politics.’ There is no woman in the Cabinet; nor in any responsible post. All the idea makers who are in a position to make ideas effective are men. That is a thought that damps thinking and encourages irresponsibility.” Apart from a few notable exceptions, this is still the case today.

Thoughts on Peace in an Air Raid by Virginia Woolf. https://newrepublic.com/article/113653/thoughts-peace-air-raid

It’s hard to believe that one man could really have contemplated a war in Europe after all the slaughter, bloodshed and suffering of two world wars. It really is beyond belief.

I’ve just started listening to Apple Music and came across Górecki’s Symphony of Sorrowful Songs, which I hadn’t listened to in a long time. Now, I admit that I am easily moved to tears, but it was the intersection of what I have been seeing and hearing over these last six weeks that got me, yet again. We are lucky to be women working in music, which is such a powerful life force. On the other hand:

The man that hath no music in himself,

Nor is not mov’d with concord of sweet sounds,

Is fit for treasons, stratagems and spoils;

The motions of his spirit are dull as night,

And his affections dark as Erebus:

Let no such man be trusted…

From The Merchant of Venice – William Shakespeare

Turin has long had a sizeable Jewish community. Around the streets of the center, we often see these brass plaques set into the pavement outside the entrances of the buildings where these people used to live. You’ll notice that they are slightly raised so that you cannot fail to see them. They commemorate the Jews who were taken from their homes in Turin and deported to Auschwitz in Poland (as an aside, Poland has been taking in its share of refugees from neighboring Ukraine). Anyway, look closely at what happened to the Valabrega family: the mother and father were murdered within two months of arriving at Auschwitz, while their daughter Stella, who was only 20 years old when she was deported, survived. I shudder to think how a young woman in such dire circumstances managed until the end of the war. I mean, is this really happening again?

Back to the Symphony of Sorrowful Songs: I remember, when I was working in a primary school in Oxfordshire, using part of the third movement, beautifully sung by one of my favorite sopranos, Dawn Upshaw, as the music for a dance by a very talented young girl.

This very short text holds such significance for me as I leave my ‘Black Dog’ behind and come into the light:

Mamo, nie płacz, nie.

Niebios Przeczysta Królowo.

Ty zawsze wspieraj mnie.

Zdrowaś Mario.

No, Mother, do not weep,

Most chaste Queen of Heaven

Support me always.

“Zdrowaś Mario.” (*)

The text is a prayer inscribed on a wall of cell no. 3 in the basement of “Palace,” the Gestapo’s headquarters in Zakopane; beneath it is the signature of Helena Wanda Blazusiakówna and the words “18 years old, imprisoned since 26 September 1944.”

(*) “Zdrowaś Mario” (Ave Maria): the opening of the Polish prayer to the Holy Mother.

Yes, this one moved me.

https://open.spotify.com/track/4iCn374fZg5UbGD1xFNo1m?si=8c599225c4264cf6

The good news is that Helena survived: she was rescued by a group of partisan fighters who took her into the hills and reunited her with her grandmother. She later married and had five children… but beyond that, I just couldn’t find out what happened to her.

And then there is my own personal war, or so it seems. Nine months after separating from my Mexican girlfriend and arriving back from Mexico with just four suitcases, it still feels like a battle to free myself from the memory of her manipulative control over me and my life. Over these last nine months, the brilliant writers of the Autostraddle website have helped me understand and accept the new realities. I came across this in one of their articles and it chimed with me…

I loved her. We built a world together. When we broke up, that world disappeared.

I think about this all the time. How a relationship is its own time zone. How we build worlds with the people we love, and we are the only people who inhabit them. And when something ends, those worlds disappear. It’s not like love is a static place we bring new lovers to every time we feel it. Love is a creation that occurs between the people feeling it. To love someone new is to agree to travel somewhere that doesn’t exist yet together. Love is a brand-new place we choose to go every time.

This also helped…

So, all the sadness of this last month meant that I got little music done, but it also made me rethink my projects. I had talked myself out of the Song Cycle, since I didn’t quite feel ready for such a huge undertaking, and had started rethinking the instrumental piece, since I already had a bit of material prepared. However, the unforeseen happened: I awoke one morning after having just dreamed of the poet Sylvia Plath. I was supposed to be taking her photograph while she sat casually on the arm of a large armchair, wearing a dark olive green cocktail dress. At this point, I have to declare that I knew about Sylvia Plath and had read a few of her poems, but I had no idea what she looked like. My dream Sylvia had a small, petite face, a slightly pointed chin, and short hair. So, my first mystery question is: why Sylvia Plath? Anyway, I had to rearrange some cushions behind her, and when I was in front of her, she looked at me and kissed me on the lips; I kissed her back, as it seemed the appropriate thing to do. And the dream ended there. When I looked for photographs of her, I found this one, which is more or less how she appeared in my dream. So, my second mystery question is: how could I dream of her likeness if I had never seen her before?

Anyway, I felt pleased and flattered that she had cured my lack of motivation after a month-long bout of depression. Since Sylvia Plath kissed me, I felt honor-bound to set one of her poems to music. I’ve chosen Edge, written only days before she sadly took her own life; she was only 30.

As a long-term sufferer of ADHD, I oscillate between procrastination and impulsivity. That being the case, it began to dawn on me that I might do better working in a different way.

Anyone who has read my very first blog will know that returning to create experimental music after 40 years of being busy with life was a daunting prospect for me. Reading the posts and seeing what my sisters are doing gives me ‘imposter syndrome’ big time, especially since my technological capabilities in music production were forty years behind. So, as I create with a different artistic sensibility, aware of how tastes and styles have changed in my chosen field, and as I try to get used to and manage the newer and more complex technologies, I face a dilemma. Do I work through the many videos on Reaper, Logic Pro, or whatever? Or do I sidestep my tendency towards ADHD-induced paralysis and start the creative process, working out how that pig of a DAW works as I go along?

And the answer is… Edge ILYSP. I so much want to create this loving piece for a special person who was taken from us far too young. So that’s what I’m doing. Logic Pro will yield her secrets as I go along, and from time to time I’ll ask her very gently: how do I…? Or I can use the ‘press all the buttons until something happens’ method; the ‘Undo’ command is conveniently placed at the top of the Edit menu.

I can report a minor success yesterday, when I tried to combine two tracks (or ‘regions’, as Logic Pro calls them) into one. In Audition, I would have selected them and done a ‘mixdown’ to a new audio clip. But Logic Pro is a child of Apple and likes to do things her way and in her language. ‘Track stack’ didn’t work for me, and I still don’t know what it means, but having heard someone else talk about bouncing tracks, I thought I’d give it a whirl. And yes, she was kind to me: she gave me a new combined track, leaving the two originals in place.
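Conceptually, a bounce or mixdown of two regions sums them sample by sample into a new buffer while the originals stay where they are. Here is a minimal Python sketch of just that idea, assuming two mono tracks at the same sample rate stored as lists of floats between -1 and 1. The function name `bounce` and the fixed 0.5 gain (to leave headroom when two loud signals add up) are illustrative assumptions; a real DAW bounce also renders plugins, automation and fader levels.

```python
def bounce(track_a, track_b, gain=0.5):
    """Mix two mono tracks into a new track, leaving both originals untouched.

    Tracks are lists of float samples in [-1.0, 1.0] at the same sample rate.
    gain scales the sum to leave headroom (0.5 is an assumed, illustrative value).
    """
    length = max(len(track_a), len(track_b))
    # Pad the shorter track with silence so both cover the same time span.
    a = list(track_a) + [0.0] * (length - len(track_a))
    b = list(track_b) + [0.0] * (length - len(track_b))
    mixed = []
    for x, y in zip(a, b):
        # Sum sample by sample, then hard-clip so the result stays in range.
        mixed.append(max(-1.0, min(1.0, gain * (x + y))))
    return mixed


region_1 = [0.5, -0.5, 1.0]
region_2 = [0.5, 0.5]
combined = bounce(region_1, region_2)  # [0.5, 0.0, 0.5]; the two regions are unchanged
```

Returning a fresh list rather than overwriting either input mirrors what the bounce did in Logic Pro here: a new combined track, with the two originals still in place.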

…it began to dawn on me that I might do better working in a different way.

Yes, and this was it: start the creative process, because all the software, plugins and modular synthesizers are pointless unless they allow me to be authentic in the creative process, and what could be more authentic than lovingly enshrining in music the words of the poet who had just kissed me? So, since I know in my head what I am looking for, I have to find the ways to create it and, as so often happens during experimentation, we find something else that is better, or can be combined, or helps us understand where to go next…

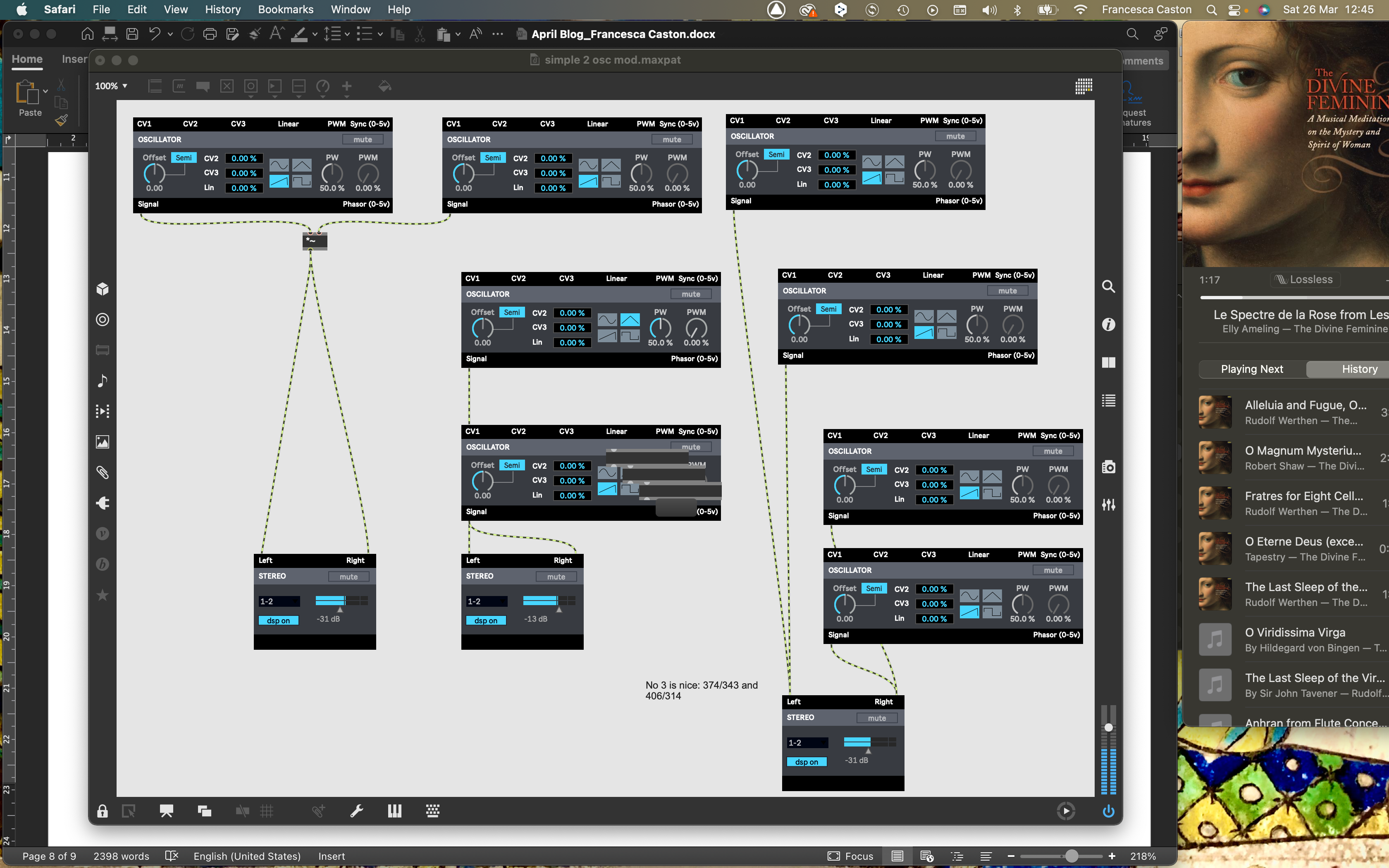

One example is my experimentation with Max MSP. Although I don’t remember the details, these BEAP modules are virtual versions of the kind of modules I used on the EMS VCS 100 modular analog synthesizer in the University of East Anglia’s recording studio.

I decided that I wanted some simple electronics and thought that a couple of sine wave oscillators would give me sounds that were not too dense. So, I patched together three variations of paired sine wave oscillators, experimented with settings to create frequency modulation in various registers, and then recorded them on three separate tracks in Logic. Through further experimentation, I now know that I could combine tracks 7 and 9, using automated volume and panning to create shifting sounds within the drones. Track 8, recorded from the middle patch of the Max MSP patcher, proved less suitable for this piece: since oscillator 1 feeds directly into oscillator 2, the changes are more dramatic. Incidentally, I shall probably rerecord the FM oscillator tones, but at least I will do so with a better idea of what I want to achieve and what is possible. The third patch, on the right, proved to be my favorite: four oscillators in pairs, each pair feeding the left or right side of a stereo output, gave me a lot of variation in gentle steps, which I like. For further experimentation, I may pass the sine waves, separately or in combination, through a filter (maybe a notch filter) to modify the sounds further, making changes as I record. As good as Max is, it would be very difficult to control in live performance through, for example, a trackpad, though I have seen some artists use other triggers.
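For anyone curious about what a pair of sine oscillators doing frequency modulation actually computes, here is a minimal Python sketch. It is not a reconstruction of the Max MSP patches above. It assumes the classic two-operator form (one sine wave bending the phase of another, as in Chowning-style FM), a 44.1 kHz sample rate, and purely illustrative frequencies and modulation indices; the names `fm_pair`, `stereo_fm_pairs` and `auto_pan` are hypothetical. The last function shows one common way to automate panning, an equal-power pan law, moving a mono drone from one side of the stereo field to the other.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def fm_pair(carrier_hz, modulator_hz, index, num_samples, sample_rate=SAMPLE_RATE):
    """Two sine oscillators: the modulator bends the carrier's phase (two-operator FM).

    index is the modulation index: larger values add more sidebands (a denser tone);
    0.0 gives a plain sine wave at carrier_hz.
    """
    out = []
    for i in range(num_samples):
        t = i / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        out.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return out


def stereo_fm_pairs(left_pair, right_pair, num_samples):
    """Four oscillators in two FM pairs, one pair per stereo channel.

    Each pair is a (carrier_hz, modulator_hz, index) tuple; returns (left, right) frames.
    """
    left = fm_pair(*left_pair, num_samples)
    right = fm_pair(*right_pair, num_samples)
    return list(zip(left, right))


def auto_pan(mono, start_pos, end_pos):
    """Equal-power pan moving linearly from start_pos to end_pos (-1 = left, +1 = right)."""
    n = len(mono)
    frames = []
    for i, sample in enumerate(mono):
        pos = start_pos if n == 1 else start_pos + (end_pos - start_pos) * i / (n - 1)
        theta = (pos + 1) * math.pi / 4  # map -1..+1 onto 0..pi/2
        frames.append((sample * math.cos(theta), sample * math.sin(theta)))
    return frames


# Illustrative values only: slightly detuned pairs so the two channels beat gently.
drone = stereo_fm_pairs((110.0, 55.0, 1.5), (110.5, 55.0, 1.2), SAMPLE_RATE)
sweep = auto_pan(fm_pair(220.0, 110.0, 0.8, SAMPLE_RATE), -1.0, 1.0)
```

Keeping the modulation index low gives the gentle, not-too-dense drones described above, while raising it quickly produces the more dramatic changes heard on Track 8. The equal-power law keeps the perceived loudness roughly constant as a sound moves across the stereo field.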

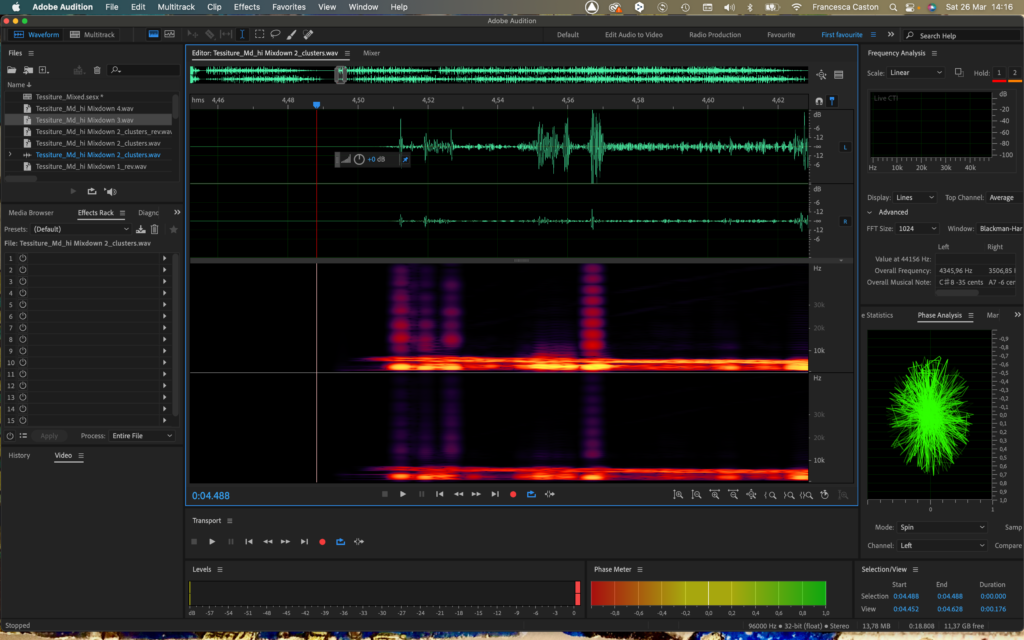

The Logic Pro screen illustrates, to an extent, the use of a DAW in the compositional process. When it comes to composition, especially with .wav audio sources, my first love, Audition, is intuitive and gives me the sensation of working with tape back in the late 70s. It even has a virtual razor blade and, just as in the tape days, you can move the playhead back and forth by hand until you hear (and now also see) the point at which you want to splice. Logic Pro doesn’t allow this, or at least I haven’t found a way to do it yet. However, since I can’t get my hands on a real chamber organ, it helps that Logic Pro has a remarkably good MIDI version. I chose the Baroque organ in preference to the others for my ostinato, since it has a nice ‘reedy’ sound and I thought it sounded a little out of tune at one point, which I like.

This next screenshot, in praise of Audition, shows one of its strengths at the stage of treating audio samples. This is a separate waveform editor (I’m sure that Logic Pro has one hidden away somewhere, waiting to be discovered), with the waveform above and the spectral view below. I’ve zoomed in enough to make a splice, and you’ll notice that the playhead is a little way ahead of the waveform; yet, as I move it backward and forward by hand, I can hear the first sound, so this is where I could splice, for example. You’ll also notice in the top left-hand corner that you can switch between the waveform editor and the multitrack view very easily. So, for processing physical sounds, this would still be my preferred option.
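Scrubbing to “hear the first sound” and then cutting there can be sketched, very loosely, as finding the first sample that rises above a loudness threshold and splitting the clip at that point. This is a hypothetical Python illustration with made-up sample values and an assumed threshold of 0.05; the names `find_onset` and `razor_cut` are invented for the example. It is not how Audition’s waveform editor or razor tool works internally, and in practice it is the ear, not a threshold, that makes the choice.

```python
def find_onset(samples, threshold=0.05):
    """Return the index of the first sample louder than threshold, or None if the clip is silent.

    A crude stand-in for listening for the first sound while scrubbing; the threshold is assumed.
    """
    for i, sample in enumerate(samples):
        if abs(sample) > threshold:
            return i
    return None


def razor_cut(samples, index):
    """Split a clip at index into (before, after), like a razor-blade splice.

    Slicing makes copies, so the original clip is left intact.
    """
    return samples[:index], samples[index:]


clip = [0.0, 0.01, -0.02, 0.3, -0.4, 0.2]  # made-up samples: near-silence, then a sound
onset = find_onset(clip)
lead_in, sound = razor_cut(clip, onset)
```

Here the quiet samples before the onset end up in `lead_in`, and `sound` starts exactly at the first audible sample, which is the point one would splice at by ear.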

So, in answer to my question from my first blog in January (“I’ll be writing about trying to juggle working between Audition, Reaper, Max MSP and maybe Logic Pro… or is it ‘make your mind up’ time?”): it looks as if I have settled on both Logic Pro and Audition, though I am still undecided about which to use for the final mix, with Max MSP for sound synthesis.

So, despite April’s sweet showers engendering growth and renewal, we are still stuck with unresolved Covid-19 and the senseless war in Ukraine: ci mancava solo quello! Non Una di Meno’s war with the patriarchy will be a long one. As Virginia Woolf said, “All the idea makers who are in a position to make ideas effective are men. That is a thought that damps thinking and encourages irresponsibility.” We in NUDM simply think that men shouldn’t be in charge of so many important things, especially if they involve armaments. Then there is my own battle to move on after personal losses; this is gradually resolving itself, and dedicating myself to creating music helps a lot.

“You know, a heart can be broken, but it still keeps a-beating just the same.”

Fannie Flagg, Fried Green Tomatoes at the Whistle-Stop Café. I tell myself this regularly and, yes, it’s still a-beating.

So, just like the weather in Turin, which is looking sunny and spring-like for the next few days, I’ll put these darker thoughts behind me and indulge myself in my musical setting of Edge.

I love you, Sylvia Plath.

And let’s not forget that Helena escaped from the Gestapo’s headquarters in Zakopane and that her indelible mark inspired a remarkable piece of music.

Love to you all from a sunny Torino

Frà